
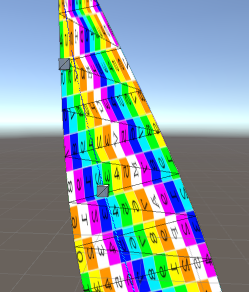

UV problem on procedural mesh generation

Hi,

I am creating a procedural mesh based on a curve, and this introduces a problem: the UVs get zig-zagged when the size of the mesh shrinks along the curve from one end point to the other.

Here is the code that lays out the UVs.

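    // Two vertices per cross-section of the curve (right and left edge).
    Vertices.Add(R);
    Vertices.Add(L);

    // u runs across the strip, v runs along the curve.
    UVs.Add(new Vector2(0f, (float)id / count));
    UVs.Add(new Vector2(1f, (float)id / count));

    // Two triangles between the previous cross-section and this one.
    start = Vertices.Count - 4;
    Triangles.Add(start + 0);
    Triangles.Add(start + 2);
    Triangles.Add(start + 1);
    Triangles.Add(start + 1);
    Triangles.Add(start + 2);
    Triangles.Add(start + 3);

    // Assign the collected data to the mesh.
    mesh.vertices = Vertices.ToArray();
    //mesh.normals = normales;
    mesh.uv = UVs.ToArray();
    mesh.triangles = Triangles.ToArray();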

Let me know if anybody had a similar problem and resolved it!

Thanks in advance. Jae

Answer by Bunny83 · Sep 07, 2017 at 01:34 AM

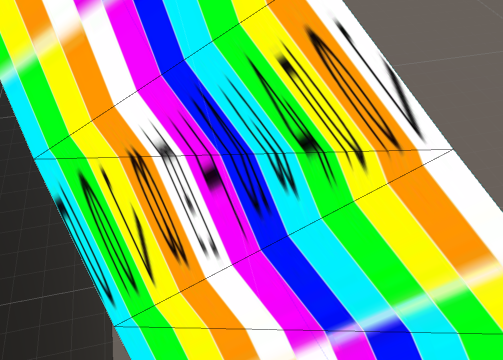

The UV coordinates are linearly interpolated across each triangle. Modern hardware implements perspective-correct mapping, which takes the depth of each vertex position into account. That only works if the edges really have the same length and merely look smaller due to perspective. If you let the geometry itself shrink at one end, the hardware cannot calculate the corrected coordinates. See this image from Wikipedia.

Affine mapping does not know the depth of the two farther points, so the interpolation happens per triangle in screen space. The lower left triangle has the longer physical edge, so the texture appears larger there and is interpolated linearly. The upper right triangle has the shorter edge, so the texture is compressed there, and the two mappings meet in a visible kink along the shared diagonal.
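To make the kink concrete, here is a minimal sketch that computes how fast `u` changes inside each triangle of a trapezoid. The corner positions are illustrative (bottom edge 12 wide, top edge 6 wide), the diagonal split from bottom right to top left is an assumption, and the `AffineSeamDemo` class and `Gradient` helper are hypothetical names, not part of any Unity API.

```csharp
using System;

public static class AffineSeamDemo
{
    // Gradient (du/dx, du/dy) of a value that is linearly interpolated
    // over a 2D triangle with corners (x0,y0), (x1,y1), (x2,y2).
    public static (double gx, double gy) Gradient(
        double x0, double y0, double u0,
        double x1, double y1, double u1,
        double x2, double y2, double u2)
    {
        double e1x = x1 - x0, e1y = y1 - y0, du1 = u1 - u0;
        double e2x = x2 - x0, e2y = y2 - y0, du2 = u2 - u0;
        double det = e1x * e2y - e1y * e2x; // twice the signed triangle area
        if (det == 0) throw new ArgumentException("Degenerate triangle");
        return ((du1 * e2y - du2 * e1y) / det,
                (e1x * du2 - e2x * du1) / det);
    }

    public static void Main()
    {
        // Lower triangle: bottom left (u=0), bottom right (u=1), top left (u=0).
        var lower = Gradient(-6, -6, 0, 6, -6, 1, -3, 6, 0);
        // Upper triangle: bottom right (u=1), top right (u=1), top left (u=0).
        var upper = Gradient(6, -6, 1, 3, 6, 1, -3, 6, 0);
        Console.WriteLine($"lower: du/dx = {lower.gx:F4}, du/dy = {lower.gy:F4}");
        Console.WriteLine($"upper: du/dx = {upper.gx:F4}, du/dy = {upper.gy:F4}");
    }
}
```

With these corners the lower triangle advances `u` by 1/12 per unit of x and the upper one by 1/6, so the texture is stretched twice as much in one half of the quad as in the other. That mismatch is the zig-zag.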

If you want to provide geometry that is distorted like this, you may need to provide UV coordinates with 4 components ("strq" or "stpq") instead of two components ("uv" or "st"). The 4-component UV coordinate basically allows you to specify a scale factor for each UV direction at each vertex. This lets you specify a shrinking factor which is taken into account during interpolation. Keep in mind that all vertex attributes are linearly interpolated across the triangle during rasterization.
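As a rough illustration of what "taken into account during interpolation" means, the sketch below interpolates two 4-component coordinates on the CPU and then divides, which is the step a shader would have to perform per pixel. It uses `System.Numerics` so it runs outside Unity; the `HomogeneousUvDemo` class and `Resolve` helper are hypothetical names, and the corner values assume a trapezoid whose top edge is half as wide as its bottom edge.

```csharp
using System;
using System.Numerics;

public static class HomogeneousUvDemo
{
    // Turn an interpolated (s, t, r, q) value into a usable (u, v) pair.
    // A zero scale factor is treated as "no correction" to avoid dividing by zero.
    public static Vector2 Resolve(Vector4 strq)
    {
        if (strq.Z != 0f && strq.W != 0f)
            return new Vector2(strq.X / strq.Z, strq.Y / strq.W);
        return new Vector2(strq.X, strq.Y);
    }

    public static void Main()
    {
        // Bottom right corner: u = 1, v = 0, horizontal scale factor 1.
        var bottomRight = new Vector4(1f * 1f, 0f, 1f, 1f);
        // Top left corner: u = 0, v = 1, horizontal scale factor 0.5.
        var topLeft = new Vector4(0f * 0.5f, 1f, 0.5f, 1f);

        // The rasterizer interpolates all four components linearly;
        // here we sample the midpoint of the diagonal.
        Vector4 mid = Vector4.Lerp(bottomRight, topLeft, 0.5f);
        Console.WriteLine($"plain affine u = {0.5f}, corrected uv = {Resolve(mid)}");
    }
}
```

At the midpoint of the diagonal the corrected `u` comes out as 2/3 instead of 0.5. That agrees with where the point sits across the trapezoid at that height, where the strip is 0.75 times the bottom width.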

See this SO post for an example, or this one. Unity now supports 4-component tex-coords.

Wow, this is really cool! I've followed all those links, but I'm having trouble understanding the examples: still not quite clear HOW to use this, in general.

"Keep in mind that all vertex attributes are linearly interpolated across the triangle during rasterization."

So is this how it works? It just interpolates a point in a triangle between the three strq Vector4 coordinates (rather than the usual uv Vector2s), and then uses only the result's x,y components to determine the texture coordinate to use at the given point?

I see we can also use vector3's. Hmm, so when would we want to use a vector3 and when a vector4?

"Unity now supports 4 component tex-coords" I've been playing around with this. Changing the z,w coordinates of a Vector4 UV coordinate appears to have NO EFFECT when using the standard shader. I've only managed to get it working with a custom shader.

Here is my current standard shader variant, which performs the de-affine transform mentioned in those posts. The critical bit is inside the fragment shader:

    if (i.tex.z != 0 && i.tex.w != 0)
        i.tex.xy = float2(i.tex.xy) / float2(i.tex.zw);

So passing 1's into the third and fourth uv0 coordinates has no effect. Passing a 0 into either coordinate forces it to NOT apply the transform.

A bit of other junk had to be done, because the standard-shader vertex shader only takes TWO coordinates as a uv0 input.

(Oh, this shader variant also has culling options visible. Not relevant here.)

Right, unfortunately none of the built-in shaders actually use homogeneous texture coordinates. I just tried your shader and it seems to be working properly.

To specify the right UVs you just have to think about specifying the "length" of the edges in the 3rd and 4th coordinate. In general something like this:

Vector4(u*L1, v*L2, L1, L2);

So imagine a quad with the points:

    -6, -6, 0   // bottom left corner
     6, -6, 0   // bottom right corner
    -3,  6, 0   // top left corner
     3,  6, 0   // top right corner

The two vertical edges have the same length, so we don't have to do anything fancy for the y-axis. However, the horizontal edges have different lengths: the bottom edge has a length of 12, the top edge a length of 6.

You can specify the UVs like this:

    0*12, 0, 12, 1   // bottom left corner
    1*12, 0, 12, 1   // bottom right corner
    0* 6, 1,  6, 1   // top left corner
    1* 6, 1,  6, 1   // top right corner

Of course you don't have to use the actual lengths. Since we just need to communicate the ratio between the edge lengths, we can basically "normalize" the factors so the larger one becomes 1 and the smaller one becomes (6 / 12) == 0.5:

    0*1,   0, 1,   1   // bottom left corner
    1*1,   0, 1,   1   // bottom right corner
    0*0.5, 1, 0.5, 1   // top left corner
    1*0.5, 1, 0.5, 1   // top right corner

Of course if the vertical edges are different as well you would modify the "y" and "w" (or "t" and "q") the same way.
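For a whole strip along a curve, the same recipe can be applied to every cross-section. The sketch below is one possible way to do it, assuming you know the strip width at each cross-section. `StripUvBuilder` and `Build` are hypothetical names, `v` is spread from 0 to 1 over the cross-sections as an assumption, and `System.Numerics.Vector4` stands in for Unity's `Vector4` so the code runs on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class StripUvBuilder
{
    // Returns two 4-component UVs per cross-section: first u = 0, then u = 1.
    // widths[i] is the width of the strip at cross-section i (an assumed input).
    public static List<Vector4> Build(IReadOnlyList<float> widths)
    {
        var uvs = new List<Vector4>(widths.Count * 2);

        // Normalize against the widest cross-section so the largest factor is 1.
        float maxWidth = 0f;
        foreach (float w in widths) maxWidth = Math.Max(maxWidth, w);
        if (maxWidth <= 0f) return uvs; // nothing sensible to normalize against

        for (int i = 0; i < widths.Count; i++)
        {
            float q = widths[i] / maxWidth; // horizontal scale factor
            float v = widths.Count > 1 ? (float)i / (widths.Count - 1) : 0f;
            uvs.Add(new Vector4(0f * q, v, q, 1f)); // Vector4(u*L1, v*L2, L1, L2), u = 0
            uvs.Add(new Vector4(1f * q, v, q, 1f)); // same with u = 1
        }
        return uvs;
    }

    public static void Main()
    {
        // The quad from the example above: bottom edge 12, top edge 6.
        foreach (Vector4 uv in Build(new float[] { 12f, 6f }))
            Console.WriteLine(uv);
    }
}
```

In Unity the resulting list would be handed to the mesh with `mesh.SetUVs(0, uvs)` (using `UnityEngine.Vector4`) instead of `mesh.uv`, and it only has a visible effect with a shader that performs the divide. A cross-section with width 0 produces a scale factor of 0, which the shader guard shown earlier treats as "no correction".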

The graphics hardware pipeline actually supports a separate 4x4 texture matrix just for processing texture coordinates. However, it isn't really used nowadays. Usually the texture offset and scale would be encoded into the texture matrix. Since Unity doesn't support rotation, it encodes the scale and offset into a single Vector4 and just does the scaling and offset "manually". In most cases that's actually enough and we don't need a matrix multiplication for this. OpenGL actually has 4 separate matrices ("modelview", "projection", "texture" and "color"). See matrix mode. In OpenGL the modelview is the combination of the local2world and camera matrices.

Thanks for the shader. I actually had the same problem some years ago (drawing a non-rectangular quad in screen space) and found this solution. However, back then there was no workaround, because the Mesh class didn't have a way to specify homogeneous texture coordinates.
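The "manual" scale and offset is just a multiply and an add per coordinate. As a small sketch, assuming the usual layout where xy holds the tiling (scale) and zw holds the offset, it looks like this on the CPU. `TextureScaleOffsetDemo` and `Apply` are hypothetical names.

```csharp
using System;
using System.Numerics;

public static class TextureScaleOffsetDemo
{
    // scaleOffset.X/Y = tiling (scale), scaleOffset.Z/W = offset.
    public static Vector2 Apply(Vector2 uv, Vector4 scaleOffset) =>
        new Vector2(uv.X * scaleOffset.X + scaleOffset.Z,
                    uv.Y * scaleOffset.Y + scaleOffset.W);

    public static void Main()
    {
        // Tile the texture twice in both directions and shift it a quarter in u.
        Vector2 result = Apply(new Vector2(0.5f, 0.5f), new Vector4(2f, 2f, 0.25f, 0f));
        Console.WriteLine(result);
    }
}
```

This covers scaling and translation only. A rotation would need the off-diagonal terms of a real matrix, which is why a single vector is enough when rotation isn't supported.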
