
How to combine a camera rendering an edge detection image effect with another camera

Hello!

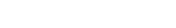

I’m trying to achieve edge detection like in Image A, where external edges are applied using an image effect.

Using the Edge Detection effect from the Standard Assets in Roberts Cross Depth Normals mode gets pretty close to this, but the full game is much more complicated and some edges are missing. Increasing the sensitivity introduces artefacts in other areas. To get more control (and to allow for internal edges), I decided to use a second camera with a replacement shader that renders each object's vertex colours instead (I've manually set these to different colours), and to run the edge detection on that camera, this time simply checking for differences in colour.
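As a rough illustration of what "checking for differences in colour" could mean per pixel, here is a minimal sketch in Python (in Unity this logic would live in the image effect's fragment shader). It assumes a Roberts cross style comparison of each pixel against its two diagonal neighbours, a hypothetical `threshold` parameter, and output where edges are black (0.0) and everything else is white (1.0). It is not the shader from this thread, just the idea behind it.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def color_distance(a: Color, b: Color) -> float:
    """Largest per-channel absolute difference between two colours."""
    return max(abs(x - y) for x, y in zip(a, b))


def roberts_color_edges(image: List[List[Color]], threshold: float = 0.1) -> List[List[float]]:
    """Return an edge mask: 0.0 (black) on edges, 1.0 (white) elsewhere.

    Each pixel is compared with its two diagonal neighbours (Roberts cross).
    Neighbours that fall outside the image are clamped to the border.
    `threshold` is a hypothetical sensitivity setting.
    """
    height, width = len(image), len(image[0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            x1 = min(x + 1, width - 1)
            y1 = min(y + 1, height - 1)
            d1 = color_distance(image[y][x], image[y1][x1])   # main diagonal
            d2 = color_distance(image[y][x1], image[y1][x])   # anti-diagonal
            row.append(0.0 if max(d1, d2) > threshold else 1.0)
        mask.append(row)
    return mask


# Usage: a 3x3 "vertex colour" render, red in the left column, green elsewhere.
red, green = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
image = [[red, green, green] for _ in range(3)]
print(roberts_color_edges(image))  # [[0.0, 1.0, 1.0], [0.0, 1.0, 1.0], [0.0, 1.0, 1.0]]
```

Because flat vertex colours only change where two differently coloured regions meet, a plain colour comparison like this picks up exactly the edges you painted in, without the depth/normal sensitivity trade-off.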

That works great and I get the result seen in Image B.

The issue is combining that camera with the main camera, which is rendering the edgeless models (Image C).

I tried setting the Clear Flags on the edge detection camera to Depth Only and then setting transparency in the image effect shader for the non-outline areas, but that didn’t work.

I essentially need to either make the white pixels transparent on the image effect camera, or multiply the two cameras' images together, but creating two render textures and multiplying them doesn't seem like the most efficient solution.

Anyone know how to get this to work? Feels like I'm pretty close and this edge detection will work nicely, I just don't know how to combine the cameras. I can post any shader code if that helps.

Thanks! George

Answer by georgebatchelor · Jul 07, 2018 at 12:10 PM

Managed to figure it out with some help.

The solution was to render the second (edge detection) camera to a render texture, add another Image Effect to the main camera, pass the render texture to that effect as a parameter, and multiply the two images together in its shader. It works perfectly.
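To show why multiplying gives the "white is transparent" result asked about above, here is a small per-pixel sketch in Python (in Unity the same product would be computed in the main camera's image effect shader). It assumes both images are the same size and that the edge image is black lines on white, as in Image B; the function name `multiply_composite` is hypothetical.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]  # RGB, each channel in [0, 1]
Image = List[List[Color]]


def multiply_composite(scene: Image, edges: Image) -> Image:
    """Per-pixel, per-channel product of the scene and the edge image.

    White edge pixels (1, 1, 1) leave the scene colour unchanged;
    black edge pixels (0, 0, 0) turn the scene pixel black.
    """
    if len(scene) != len(edges) or any(len(a) != len(b) for a, b in zip(scene, edges)):
        raise ValueError("scene and edge images must be the same size")
    return [
        [tuple(s * e for s, e in zip(sp, ep)) for sp, ep in zip(srow, erow)]
        for srow, erow in zip(scene, edges)
    ]


# Usage: one scene colour, composited with a white pixel and a black edge pixel.
white, black = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)
scene = [[(0.5, 0.8, 0.2), (0.5, 0.8, 0.2)]]
edges = [[white, black]]
print(multiply_composite(scene, edges))  # [[(0.5, 0.8, 0.2), (0.0, 0.0, 0.0)]]
```

Since multiplying by white is a no-op, there is no need for alpha in the edge image at all, and only the edge camera needs its own render texture; the main camera's image effect already receives its own rendered image as the source.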
