- Home /

Encoding geometry information for Sub-Pixel Shadow Mapping

I'm trying to implement the SPSM approach described in this paper: http://jmarvie.free.fr/Publis/2014_I3D/i3d_2014_SubPixelShadowMapping.pdf

First, why am I trying to do this: I am currently using a shadow map, rendered from the view point of a character to display their view cone on the ground. Using regular shadow mapping works fine so far, but produces jagged edges, mainly where obstacles obstruct the view. I hope SPSM might provide better results.

I'm running into problems while doing step 3.3, the encoding of triangle information in the shadow map. There are two distinct problems.

Getting all the information needed into the fragment shader for writing into a render texture.

Encoding the data into a suitable 128bits-RGBA format.

Regarding 1: I need the following data for the SPSM:

All three triangle vertices of the closest occluding triangle

Depth value at texel center

Depth derivatives

I tried getting the triangle information from the geometry shader while calculating the other two in the fragment shader. My first naive approach looks like this:

struct appdata

{

float4 vertex : POSITION;

};

struct v2g

{

float4 pos : POSITION;

float3 viewPos : NORMAL;

};

struct g2f

{

float4 vertex : SV_POSITION;

float4 v0 : TEXCOORD1;

float4 v1 : TEXCOORD2;

float4 v2 : TEXCOORD3;

};

v2g vert(appdata v)

{

v2g o;

UNITY_INITIALIZE_OUTPUT(v2g, o);

o.pos = UnityObjectToClipPos(v.vertex);

return o;

}

[maxvertexcount(3)]

void geom(triangle v2g input[3], inout TriangleStream<g2f> outStream)

{

g2f o;

float4 vert0 = input[0].pos;

float4 vert1 = input[1].pos;

float4 vert2 = input[2].pos;

o.vertex = vert0;

o.v0 = vert0;

o.v1 = vert1;

o.v2 = vert2;

outStream.Append(o);

o.vertex = vert1;

o.v0 = vert0;

o.v1 = vert1;

o.v2 = vert2;

outStream.Append(o);

o.vertex = vert2;

o.v0 = vert0;

o.v1 = vert1;

o.v2 = vert2;

outStream.Append(o);

}

float4 frag(g2f i) : SV_TARGET

{

float4 col;

half depth = i.vertex.z;

half dx = ddx(i.vertex.z);

half dy = ddy(i.vertex.z);

float r1 = i.v0.x; // _ScreenParams.x; <– this ranges from aroung -5 to 5

float g1 = i.v0.y; // _ScreenParams.y;

float r2 = i.vertex.x // _ScreenParams.x; <– this ranges from 0 to 1920

float g2 = i.vertex.y // _ScreenParams.y;

col = float4(r2, g2, 0, 1);

return col;

}

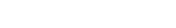

I'm currently rendering the interpolated vertex position as the fragment color for debugging. When rendering it like this I get the following output:

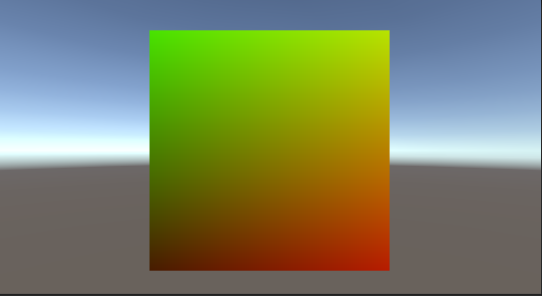

If I render the non-interpolated position of the "first" vertex of each triangle I get the following:

It looks correct so far. What confuses me, is that i.vertex.x has values ranging from 0 to screen width (e.g. 1920) and i.v0.x has value ranging from around -5 to +5. Shouldn't both be at least roughly the same (I know one is interpolated while the other is not) since they are both transformed from object to clip space? Or is the SV_POSITION semantic working some magic behind the scenes?

Regarding 2: My second problem is the actual encoding of the values into a 128bits-RGBA format. The paper describes the encoding very briefly on page 3. Is there a way to pack two half into one float? Or a clever way to bring those values in the range of [0, 1) so I can use Unity's encoding? What about the two derivatives (8bit values)?

Alternatively, I'm very glad for any advice on how to improve the "shadow quality" for the view cone rendering in a different way, apart from using a higher resolution or more shadow maps.

Your answer