
RenderTexture behaviour inconsistent with cameras: rendering twice to a RenderTexture while respecting depth, rendering object visibility

Hi,

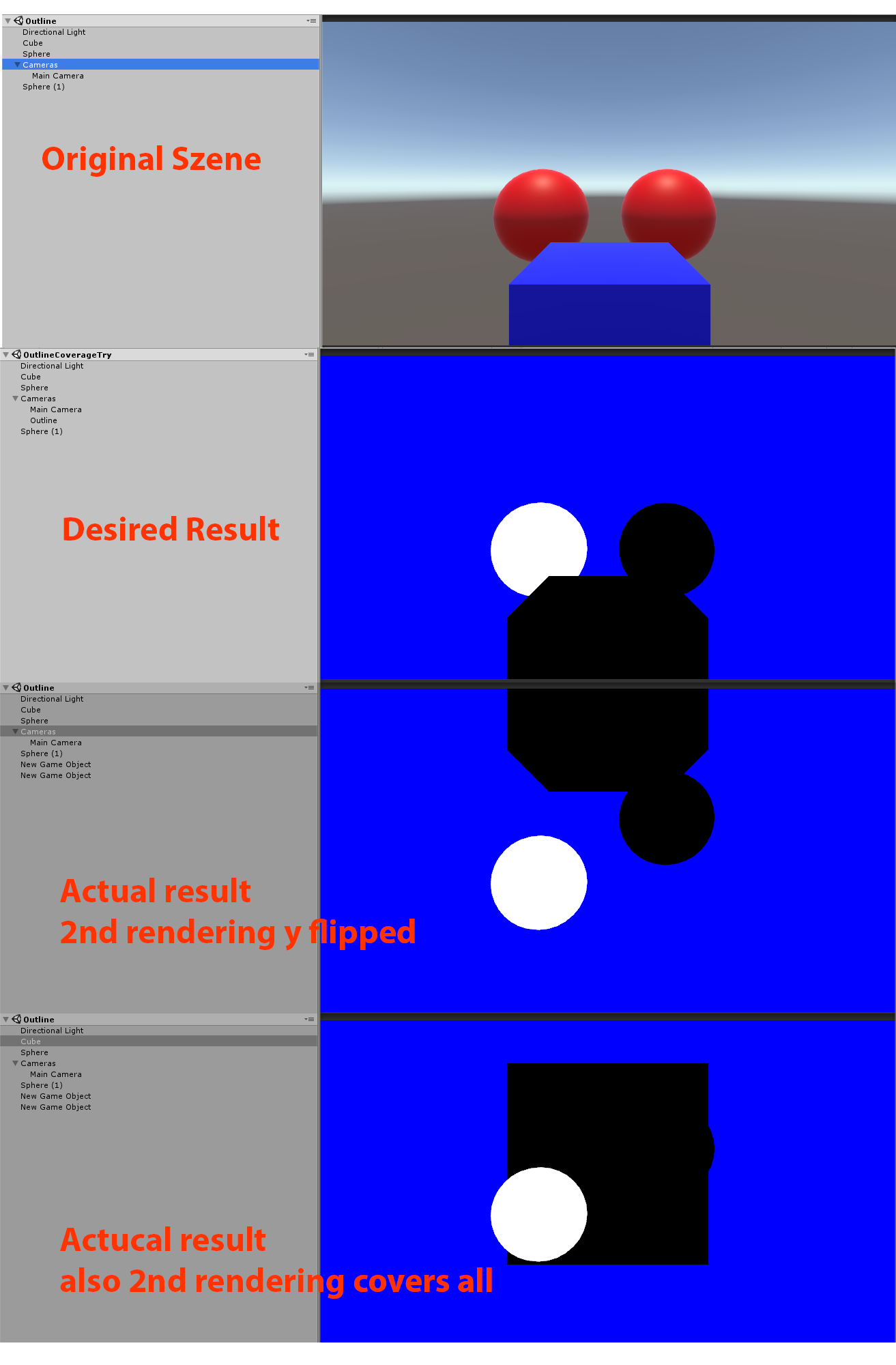

I would like to render a object isolated to black/white mask rendertexture but respecting the occlusion from other objects. This should the foundation for a fancy image effect. The object to be affected is on a unique layer. In the original scene the sphere on the left. So the result should be what a normal Camera would render but the visible objects on that certain layer white and everything else black.

Please see the image below for visual clarfication:

Example Scene / Desired result

I could replicate the desired behaviour with two cameras in the scene just fine:

Camera1:

- Culling Mask: everything except "special layer"

- Clear Flags: Solid Color, blue (blue for now, so we can see the objects rendered in black)

- Depth: 1

Camera2:

- Culling Mask: only "special layer"

- Clear Flags: Don't Clear

- Depth: 2

For testing purposes, the shaders on the objects are the same ones I will inject with Camera.RenderWithShader().

Everything gets rendered how it should be!
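The two culling masks in this setup are plain 32-bit bit sets, one bit per layer, and together they cover every layer exactly once. A minimal sketch of that arithmetic in plain C# (runs outside Unity), assuming the special layer has index 8; that index is hypothetical, in Unity it would come from `LayerMask.NameToLayer("Outline")`:

```csharp
using System;

// Plain C# (no Unity) illustration of culling-mask arithmetic: one bit per layer, 32 layers.
// Layer index 8 below is a hypothetical stand-in for LayerMask.NameToLayer("Outline").
public static class LayerMaskMath
{
    // Mask that renders only the given layer (Camera2's culling mask).
    public static int OnlyLayer(int layer)
    {
        // NameToLayer returns -1 for unknown names; C# would silently turn 1 << -1 into 1 << 31.
        if (layer < 0 || layer > 31)
            throw new ArgumentOutOfRangeException(nameof(layer));
        return 1 << layer;
    }

    // Mask that renders everything in 'mask' except the given layer (Camera1's culling mask).
    public static int ExceptLayer(int mask, int layer) => mask & ~OnlyLayer(layer);

    // True if a camera with this mask would render objects on the given layer.
    public static bool Includes(int mask, int layer) => (mask & OnlyLayer(layer)) != 0;

    public static void Main()
    {
        const int everything = ~0;  // "Everything" in the Inspector: all 32 bits set
        const int outlineLayer = 8; // hypothetical index of the special layer

        int cam2Mask = OnlyLayer(outlineLayer);
        int cam1Mask = ExceptLayer(everything, outlineLayer);

        Console.WriteLine(cam2Mask);                            // 256
        Console.WriteLine(Includes(cam1Mask, outlineLayer));    // False
        Console.WriteLine((cam1Mask | cam2Mask) == everything); // True
    }
}
```

The range check is there because `LayerMask.NameToLayer` returns -1 when the layer name is not defined, and C# masks an `int` shift count to its low five bits, so an unchecked `1 << -1` would quietly select layer 31 instead of failing.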

Actual result using RenderTexture and instantiated Cameras

When I recreated the setup of the example scene in my original scene, i.e. rendering into a RenderTexture twice with two cameras that mimic the exact settings of the example scene and then rendering the RenderTexture to the screen, I noticed two problems.

First: the first rendered image is flipped in the y-direction.

Second: when I move the cube (because of the flipping) so that it intersects the left sphere again, the sphere is always in front because it was rendered afterwards, unlike in the example scene, where the second camera respected the depth.
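To make the expected versus the actual behaviour concrete, here is a toy one-dimensional model in plain C#. It is not Unity code and all names are hypothetical: two passes draw into one shared colour + depth target with an LEqual depth test and depth writes on, and the only difference between the two runs is whether the depth written by the first pass is still there when the second pass draws.

```csharp
using System;

// Toy 1-D model (not Unity API; all names hypothetical) of two passes drawing into
// one colour + depth target with ZTest LEqual and ZWrite On.
// '.' = blue clear colour, 'k' = black (pass 1), 'w' = white (pass 2).
public static class TwoPassMask
{
    // Draw pixels [start, end) at depth z; a pixel is written only if it passes the depth test.
    static void Draw(char[] color, float[] depth, int start, int end, float z, char c)
    {
        for (int x = start; x < end; x++)
        {
            if (z <= depth[x])
            {
                color[x] = c;
                depth[x] = z; // depth write
            }
        }
    }

    public static string Render(bool keepDepthBetweenPasses)
    {
        const int width = 8;
        var color = new char[width];
        var depth = new float[width];
        for (int x = 0; x < width; x++) { color[x] = '.'; depth[x] = 1f; } // clear colour + depth

        // Pass 1 (Camera1): the cube, near the camera, drawn black.
        Draw(color, depth, 2, 6, 0.3f, 'k');

        // If pass 1's depth is lost, pass 2 behaves as if nothing were in front of it.
        if (!keepDepthBetweenPasses)
            for (int x = 0; x < width; x++) depth[x] = 1f;

        // Pass 2 (Camera2, no clear): the sphere, further away, drawn white.
        Draw(color, depth, 4, 8, 0.6f, 'w');

        return new string(color);
    }

    public static void Main()
    {
        Console.WriteLine(Render(true));  // ..kkkkww  (cube hides part of the sphere)
        Console.WriteLine(Render(false)); // ..kkwwww  (sphere always in front)
    }
}
```

The second line reproduces the symptom described above. The model only illustrates the symptom; it says nothing about why the depth from the first render is not honoured in the RenderTexture case.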

Question

So maybe someone can point me to a solution for these two issues, explain why the behaviour differs from what I expect, or propose an alternative way of rendering the objects of a layer in isolation into a mask while respecting that they might be covered by other objects in front of them.

Code

Here is my code on the camera: it spawns two temporary cameras, renders the scene with each camera into a RenderTexture and then displays the result on screen. The shaders in the scene are the plain Standard shader, and the injected shaders are simple unlit ones that render either black or white.

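```csharp
using UnityEngine;

// Attach to the main camera. Renders the scene twice into one RenderTexture
// (everything except the "Outline" layer in black, then the "Outline" layer in white)
// and shows the result on screen.
[RequireComponent(typeof(Camera))]
public class PostEffect1 : MonoBehaviour
{
    public Shader DrawWhite;
    public Shader DrawBlack;

    Camera AttachedCamera;
    Camera TempCam1;
    Camera TempCam2;

    void Start()
    {
        AttachedCamera = GetComponent<Camera>();

        // Disabled helper cameras: they only render when RenderWithShader is called on them.
        TempCam1 = new GameObject("TempCam1").AddComponent<Camera>();
        TempCam1.enabled = false;

        TempCam2 = new GameObject("TempCam2").AddComponent<Camera>();
        TempCam2.enabled = false;
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        int outlineLayer = LayerMask.NameToLayer("Outline");

        // Temporary camera 1: everything except the "Outline" layer, cleared to blue.
        TempCam1.CopyFrom(AttachedCamera);
        TempCam1.clearFlags = CameraClearFlags.Color;
        TempCam1.backgroundColor = Color.blue;
        TempCam1.cullingMask &= ~(1 << outlineLayer);

        // Temporary camera 2: only the "Outline" layer, no clear, so it draws over camera 1's result.
        TempCam2.CopyFrom(AttachedCamera);
        TempCam2.clearFlags = CameraClearFlags.Nothing;
        TempCam2.cullingMask = 1 << outlineLayer;

        // Camera.depth only orders cameras that Unity renders automatically;
        // for these manually rendered cameras, the call order below decides.
        TempCam1.depth = 1;
        TempCam2.depth = 2;

        // Temporary RenderTexture with a 16-bit depth buffer, shared by both cameras.
        // GetTemporary/ReleaseTemporary avoids allocating a new RenderTexture object every frame.
        RenderTexture TempRT = RenderTexture.GetTemporary(source.width, source.height, 16, RenderTextureFormat.Default);

        // Set the cameras' target texture when rendering.
        TempCam1.targetTexture = TempRT;
        TempCam2.targetTexture = TempRT;

        // Render all objects each camera can see, but with our custom shader (empty tag = all objects).
        TempCam1.RenderWithShader(DrawBlack, "");
        TempCam2.RenderWithShader(DrawWhite, "");

        // Detach the texture before returning it to the pool.
        TempCam1.targetTexture = null;
        TempCam2.targetTexture = null;

        // Copy the temporary RT to the final image.
        Graphics.Blit(TempRT, destination);

        // Release the temporary RT.
        RenderTexture.ReleaseTemporary(TempRT);
    }
}
```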

```shaderlab
// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

// This shader goes on the objects themselves. It just draws the object in white.
Shader "Custom/DrawWhite"
{
    SubShader
    {
        Tags { "RenderType" = "Opaque" }
        LOD 100

        //ZWrite Off
        //ZTest Always
        //Lighting Off

        Pass
        {
            CGPROGRAM
            #pragma vertex VShader
            #pragma fragment FShader
            #include "UnityCG.cginc"

            // Vertex input: object-space position only.
            struct VertexInput
            {
                float4 vertex : POSITION;
            };

            struct VertexToFragment
            {
                float4 pos : SV_POSITION;
            };

            // Just get the position correct.
            VertexToFragment VShader(VertexInput v)
            {
                VertexToFragment o;
                o.pos = UnityObjectToClipPos(v.vertex);
                return o;
            }

            // Return white.
            half4 FShader() : SV_Target
            {
                return half4(1, 1, 1, 1);
            }
            ENDCG
        }
    }
}
```

Any news about this issue? We are experiencing the same issue when we render to the same render target with multiple RenderWithShader passes. We are currently "solving" it by flipping the vertices of the render texture every other iteration.

Kind regards, CH.
