
Custom shaders cause objects to render behind other objects from certain angles

I've been trying to learn how to write shaders, and for the most part it has been going well, as I have achieved my desired effects. However, for some reason, every custom shader I have written randomly renders behind other objects when viewed from certain angles.

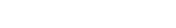

The shader I made is just a simple texture distortion and scrolling effect to make a simple fog effect. In these images the red fog is using the shader, at this angle it renders in front as it should:  However, when viewed slightly to the right, it dissapears behind the grass object:

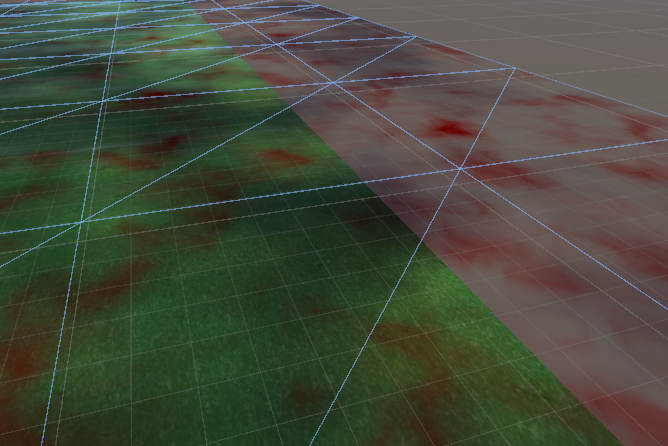

However, when viewed slightly to the right, it dissapears behind the grass object:

I have tried many solutions from what seemed like similar problems on this site and the Unity forums, and I've read up on ShaderLab and shaders in the documentation. I have also tried changing the render queue, ZTest, culling, and ZWrite.

Here's the shader:

```shaderlab
Shader "Custom/DistortBytexture" {
    Properties {
        _Color ("Color", Color) = (1,1,1,1)
        _MainTex ("Albedo (RGB)", 2D) = "white" {}
        _DistortTex ("Distort Texture (RGB)", 2D) = "white" {}
        _XScale ("XScale", Range(-1.0,1.0)) = 0.5
        _YScale ("YScale", Range(-1.0,1.0)) = 0.5
        _XSpeed ("X Scroll Speed", Range(-1.0,1.0)) = 0.5
        _YSpeed ("Y Scroll Speed", Range(-1.0,1.0)) = 0.5
        _XSpeedD ("Distort X Scroll Speed", Range(-0.1,0.1)) = 0.05
        _YSpeedD ("Distort Y Scroll Speed", Range(-0.1,0.1)) = 0.05
        _Alpha ("Alpha", Range(0,10.0)) = 0.5
    }

    SubShader {
        // No "Queue" tag is set, so the default Geometry queue is used
        Tags { "RenderType"="Opaque" }
        LOD 200
        Lighting Off
        Cull Off
        ZWrite Off
        ZTest LEqual

        CGPROGRAM
        #pragma surface surf Lambert alpha:fade noambient noshadow nodynlightmap

        fixed4 _Color;
        half _XScale;
        half _XSpeed;
        half _XSpeedD;
        half _YScale;
        half _YSpeed;
        half _YSpeedD;
        half _Alpha;
        sampler2D _MainTex;
        sampler2D _DistortTex;

        struct Input {
            float2 uv_MainTex;
            float2 uv_DistortTex;
        };

        void surf (Input IN, inout SurfaceOutput o) {
            // Scroll the distortion texture over time (_Time[1] is _Time.y, time in seconds)
            IN.uv_DistortTex.x += _Time[1] * _XSpeedD;
            IN.uv_DistortTex.y += _Time[1] * _YSpeedD;
            fixed4 d = tex2D (_DistortTex, IN.uv_DistortTex);

            // Offset the main UVs by the distortion sample (red channel) plus a scroll
            IN.uv_MainTex.x += d.r*_XScale + _Time[1] * _XSpeed;
            IN.uv_MainTex.y += d.r*_YScale + _Time[1] * _YSpeed;
            fixed4 c = tex2D (_MainTex, IN.uv_MainTex) * _Color;

            o.Albedo = c.rgb;
            // Texture alpha raised to the 4th power, scaled by _Alpha
            o.Alpha = c.a * c.a * c.a * c.a * _Alpha;
        }
        ENDCG
    }
    FallBack "Diffuse"
}
```

Any help or advice would be greatly appreciated! For all I know, I've made a stupid mistake and missed a crucial line, but nothing I tried worked, so I caved in and am asking here. Thanks in advance! :)

Answer by JonnyHilly · Aug 26, 2019 at 04:03 AM

It helps to know some general rendering principles when figuring out how to fix these things. You may know these already, but it sometimes helps to go over them.

First off, solid (opaque) geometry is rendered in front-to-back order (closest to the camera first). A far object won't render where it fails the Z (depth) test against the closer objects drawn earlier.

Next, transparent objects are drawn, in the reverse order: back to front (furthest first). They won't draw over solid geometry that is closer to the camera, but they will draw over other transparent objects, since transparent shaders usually don't write to the depth buffer.

Now the main problem here is: how do you define closer or further? On a pixel-by-pixel basis it's easy, but when going through one object at a time, you have to generalize and usually pick a point on the object. Unity probably uses the center of the object. IMPORTANT: it doesn't sort by each polygon's Z range, it sorts per object.
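The per-pixel part of this can be sketched for a single pixel. This is a simplified Python model (an assumption, not Unity's code): each fragment is depth-tested with `ZTest LEqual`, blended with standard `SrcAlpha`/`OneMinusSrcAlpha` blending, and only writes depth if its shader has `ZWrite On`. The `grass` and `fog` values are hypothetical, chosen to mimic semi-transparent fog with `ZWrite Off` in front of solid grass.

```python
from dataclasses import dataclass

@dataclass
class Fragment:
    name: str
    depth: float   # distance from the camera at this pixel (smaller = closer)
    color: tuple   # (r, g, b), each in 0..1
    alpha: float   # 1.0 = fully opaque
    zwrite: bool   # True if the object's shader writes to the depth buffer

def shade_pixel(fragments, clear_color=(0.0, 0.0, 0.0)):
    """Draw fragments into one pixel in list order (simplified model).

    Each fragment is depth-tested (ZTest LEqual), blended with
    SrcAlpha / OneMinusSrcAlpha, and writes depth only if zwrite is True.
    """
    color = clear_color
    depth_buffer = float("inf")  # cleared depth: nothing drawn yet
    for f in fragments:
        if f.depth > depth_buffer:  # LEqual fails: something closer already wrote depth
            continue
        a = f.alpha
        color = tuple(a * src + (1.0 - a) * dst for src, dst in zip(f.color, color))
        if f.zwrite:
            depth_buffer = f.depth
    return color

# Hypothetical fragments: opaque grass behind semi-transparent fog (ZWrite Off).
grass = Fragment("grass", depth=10.0, color=(0.0, 1.0, 0.0), alpha=1.0, zwrite=True)
fog = Fragment("fog", depth=5.0, color=(1.0, 0.0, 0.0), alpha=0.5, zwrite=False)
far_fog = Fragment("far fog", depth=15.0, color=(1.0, 0.0, 0.0), alpha=0.5, zwrite=False)

print(shade_pixel([grass, fog]))      # (0.5, 0.5, 0.0): fog blends over the grass
print(shade_pixel([fog, grass]))      # (0.0, 1.0, 0.0): grass paints over the fog
print(shade_pixel([grass, far_fog]))  # (0.0, 1.0, 0.0): fog behind the grass fails the depth test
```

The depths are the same in the first two calls; only the draw order changes. Because the fog never writes depth, the grass passes the depth test when it is drawn second and paints over the fog, which looks like the fog disappearing behind the grass.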

Now consider this in reference to your project. You probably have an object whose center, depending on your view angle, causes it to be drawn before or after the other object. The per-pixel Z-buffer distance check is still done, BUT the order in which the objects are drawn can change, which can affect the display, especially with semi-transparent objects. With that in mind, you have several ways to fix things:

1. Put your two objects on different layers, have a different camera draw each layer, and use the camera render order to force the draw order.
2. If one is solid and the other transparent, the transparent one will always render after.
3. If both are transparent, you could combine the layers into a single shader, with pass 1 and pass 2 rendering the layers (or possibly in one pass, but with consistent color combining).
4. Check the size, shape, and center point of your objects to see if that is causing your issue. You *might* be able to alter the shape of the geometry so that one center is always consistently closer to the camera.
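The view-angle effect can be sketched the same way. This is again a simplified Python model, not Unity's actual sorting code: objects are drawn queue by queue, queues up to 2500 are treated as opaque and sorted front-to-back (Unity's documentation describes that split), higher queues are sorted back-to-front, and distance is measured only to each object's center. The positions and camera locations are hypothetical.

```python
import math

GEOMETRY = 2000     # Unity's built-in "Geometry" queue value
TRANSPARENT = 3000  # Unity's built-in "Transparent" queue value

def draw_order(objects, camera_pos):
    """Return object names in draw order (simplified model, not Unity's code).

    objects: list of (name, center, queue) tuples. Lower queues draw first.
    Within a queue, queues up to 2500 sort front-to-back and higher queues
    sort back-to-front, using only the distance to each object's center.
    """
    def sort_key(obj):
        _name, center, queue = obj
        d = math.dist(center, camera_pos)
        return (queue, d if queue <= 2500 else -d)

    return [obj[0] for obj in sorted(objects, key=sort_key)]

# Hypothetical scene: two transparent objects whose centers are at similar depths.
fog = ("fog", (0.0, 0.0, 10.0), TRANSPARENT)
grass = ("grass", (3.0, 0.0, 9.0), TRANSPARENT)

print(draw_order([fog, grass], (5.0, 0.0, 0.0)))   # ['fog', 'grass']
print(draw_order([fog, grass], (-5.0, 0.0, 0.0)))  # ['grass', 'fog']

# Fix 2: with the grass in an earlier (opaque) queue, the order no longer flips.
solid_grass = ("grass", (3.0, 0.0, 9.0), GEOMETRY)
print(draw_order([fog, solid_grass], (5.0, 0.0, 0.0)))   # ['grass', 'fog']
print(draw_order([fog, solid_grass], (-5.0, 0.0, 0.0)))  # ['grass', 'fog']
```

Moving the camera flips the order of the two transparent objects even though neither of them moved, because only their centers are compared. Putting the solid object in an earlier queue makes the queue, not the distance, decide which one is drawn first.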
