
How do I decode a depthTexture into linear space to get a [0-1] range in HLSL?

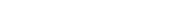

I've set a RenderTexture as my camera's target texture. I've chosen DEPTH_AUTO as its format so it renders the depth buffer:

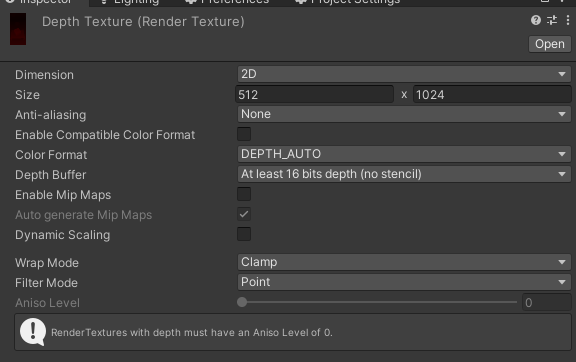

I'm reading this texture in my shader with `float4 col = tex2D(_DepthTexture, IN.uv);`. As expected, the result isn't linear between my near and far planes, since depth textures have more precision towards the near plane. So I tried `Linear01Depth()`, as recommended in the docs:

    float4 col = tex2D(_DepthTexture, IN.uv);
    float linearDepth = Linear01Depth(col);
    return linearDepth;

However, this gives me unexpected output. If I sample just the red channel, I get the non-linear depth map; if I use `Linear01Depth()`, the result is mostly black.

Question: What color format does DEPTH_AUTO select in the Render Texture inspector, and how do I convert it to linear [0, 1] space? Is there a manual approach, such as a logarithmic or exponential function, that I could use?
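
For reference, here is a minimal sketch of what a manual conversion might look like. It is neither logarithmic nor exponential: for a perspective projection the stored depth is a reciprocal function of distance, so the inverse is a reciprocal too. The uniforms `_DepthNear` and `_DepthFar` and both function names are hypothetical, not Unity built-ins. The sketch assumes they are set from script to the clip planes of the camera that rendered `_DepthTexture`, that the camera is perspective, and that the raw depth is in the red channel. As far as I can tell this is the same math `Linear01Depth()` performs using `_ZBufferParams`, so it is only a way to rule out mismatched camera parameters, not a confirmed fix.

```hlsl
// Hypothetical uniforms (not Unity built-ins): near and far clip planes
// of the camera that rendered _DepthTexture, set from script.
float _DepthNear;
float _DepthFar;

// Converts a raw depth-buffer sample to (eye depth / far plane).
// The near plane maps to near/far rather than 0.
float LinearizeDepth01(float rawDepth)
{
#if defined(UNITY_REVERSED_Z)
    // Reversed-Z platforms store 1 at the near plane and 0 at the far plane.
    rawDepth = 1.0 - rawDepth;
#endif
    // Assumes a perspective projection with depth stored in [0, 1].
    float farOverNear = _DepthFar / _DepthNear;
    return 1.0 / ((1.0 - farOverNear) * rawDepth + farOverNear);
}

// Remaps so that the near plane is 0 and the far plane is 1.
float LinearizeDepthNearToFar(float rawDepth)
{
    float eyeDepth = LinearizeDepth01(rawDepth) * _DepthFar;
    return (eyeDepth - _DepthNear) / (_DepthFar - _DepthNear);
}
```

In the fragment shader this would be used as `return LinearizeDepthNearToFar(tex2D(_DepthTexture, IN.uv).r);`. Note that with a far plane much larger than the scene, a correctly linearized depth still looks mostly black, because most geometry sits at a small fraction of the far distance.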
