
Grab Pass alternative? Frag shader that applies alpha values behind it to itself.

Hi!

I was wondering whether there is a more efficient alternative to a grab pass. The current model is very expensive and adds a draw call for every use. I am not very experienced with shaders, and I just need to implement a simple fragment shader that takes on the alpha value of every object behind it (think of a grab pass that yields a grayscale alpha channel):

Let's say we have a scene like this, with a plane that has our shader+material combo, and a camera that points perpendicular to our plane:

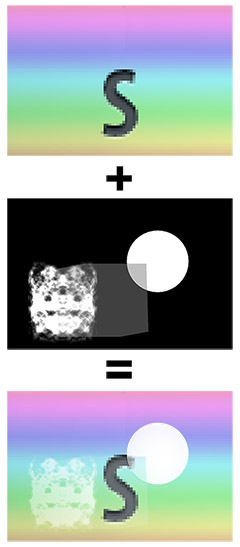

Now, if we add semi-transparent materials to our objects, we want the plane to see what's behind it (relative to the Main Camera, of course) and apply the total alpha value to itself through a fragment shader, like this:

The resulting mask is a grayscale alpha channel:

And the final result should look like this from the Scene view:

It is a very simple concept, but the resulting grab pass shader was clunky and required layering to get the correct alpha (am I doing something wrong?). That is why I need just a simple fragment shader that lets me modify or recalculate the alpha.

So how does data input work in a shader? I always thought of a shader as a simple texture processor, but with a grab pass it seems to be much more than that. I have several books about shaders, including NVIDIA's Cg tutorial, and none of them explains in simple terms how data gets in and out, how the color calculations are made, or how texture combiners work in detail.

Please help or give some advice to a shader beginner! Would GREATLY appreciate your help. Long live Unity!

The usual way shaders work is that they have no access to the "existing" pixel. Your shader code can set your pixel's color, alpha and depth, and the material picks the depth test and blend mode. But that's it. The non-programmable hardware looks up the old pixel and decides whether to use it, discard it, or blend with it.

That's what the grab pass is for. It copies the "existing" pixels into a texture, and a texture is something your shader can look up.
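To make that split concrete, here is a tiny CPU-side model in Python. It is only a sketch, not Unity or GPU code: `fragment_shader` and `blend_stage` are made-up names, and the blend is assumed to be the common "SrcAlpha OneMinusSrcAlpha" mode applied to all four channels. The point is that the shader function never receives the old pixel; only the blend step does.

```python
def fragment_shader(material_rgba):
    """Stand-in for a fragment shader (hypothetical name).

    It sees only its own inputs and returns a colour; the pixel
    already in the framebuffer is not passed in at all.
    """
    return material_rgba


def blend_stage(src, dst):
    """Stand-in for the fixed-function blend step (hypothetical name).

    Assumes 'SrcAlpha OneMinusSrcAlpha' on every channel:
    out = src * src_alpha + dst * (1 - src_alpha)
    """
    a = src[3]
    return tuple(s * a + d * (1.0 - a) for s, d in zip(src, dst))


# The framebuffer already holds an opaque blue pixel.
old_pixel = (0.0, 0.0, 1.0, 1.0)

# The shader outputs half-transparent red without knowing about the blue.
new_pixel = fragment_shader((1.0, 0.0, 0.0, 0.5))

# Only the blend stage ever touches both values.
framebuffer_pixel = blend_stage(new_pixel, old_pixel)
```

A grab pass sidesteps this by copying the framebuffer into a texture first, so the old pixel arrives in the shader as an ordinary texture sample.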

What if I put the 100% transparent plane at the very back? It would eventually get the info I need at the fragment stage, wouldn't it? Afterwards I could layer it appropriately, and with queue/offsets pass the alpha layer to my texture?

Will that work, Owen?

If you draw a screen-sized plane last, sure, the old pixels will already be computed, but you still aren't allowed to look at them (without a grab pass.)

If this was a CPU, you could figure out a way to look up old work, but graphics cards just don't work that way (if they did, no one would ever need a grab-pass.)

I thought the OpenGL red book had the best explanation, or look up "GPU pipeline" for all the steps.

Answer by Jde · Aug 27, 2013 at 02:15 PM

Looks like your best bet would be to use a RenderTexture combined with Camera.RenderWithShader instead of a GrabPass. The replacement shader used by RenderWithShader could be one which only displays the alpha channels of rendered objects.

The resulting RenderTexture would then be a greyscale mask image (like the one you show above), which you could use in your main shader by plugging it into a texture slot via script.
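The data flow of that two-pass idea can be modelled on the CPU in a few lines of Python. This is a rough sketch only, not Unity code: `render_alpha_mask` stands in for the replacement-shader render into a RenderTexture, `plane_shader` stands in for the main shader, and both names are hypothetical. Objects are simplified to axis-aligned rectangles, and where objects overlap the larger alpha is kept, which is purely an assumption (the question does not say how the "total" alpha should be combined).

```python
def render_alpha_mask(objects, width, height):
    """Pass 1 (hypothetical): draw every object with a replacement
    'shader' that writes only its alpha, producing a greyscale mask."""
    mask = [[0.0] * width for _ in range(height)]
    for obj in objects:
        x0, y0, x1, y1 = obj["rect"]  # half-open pixel rectangle
        for y in range(y0, y1):
            for x in range(x0, x1):
                # Assumption: overlapping objects keep the larger alpha.
                mask[y][x] = max(mask[y][x], obj["alpha"])
    return mask


def plane_shader(base_rgb, mask, x, y):
    """Pass 2 (hypothetical): the plane's shader samples the mask like
    any other texture and uses the sample as its own alpha."""
    return base_rgb + (mask[y][x],)


# Two semi-transparent objects behind the plane, overlapping at (1, 1).
objects = [
    {"rect": (0, 0, 2, 2), "alpha": 0.25},
    {"rect": (1, 1, 3, 3), "alpha": 0.5},
]
mask = render_alpha_mask(objects, width=4, height=3)
pixel = plane_shader((1.0, 1.0, 1.0), mask, x=1, y=1)
```

In Unity the first function would correspond to rendering a second camera into the RenderTexture with the replacement shader, and the second to an ordinary texture lookup in the plane's shader.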
