
Is there any way to determine Unity's actual target frame rate?

My code allows the user to choose how many calculations are performed per second. I have a coroutine that can yield return many times in a row if the number selected is low, or it can perform the calculation many times in a row without yielding if the number selected is high. In order to do that, my code needs to know the time between yields. So I do something like this:

timeWaited += Time.deltaTime;

However, if the number of calculations is very high, Time.deltaTime could grow larger, and the larger Time.deltaTime is the more calculations would be performed. It's a vicious cycle that could easily crash my program. So instead of using Time.deltaTime I want to use the frame rate that Unity is trying to achieve rather than the one it happened to have on that frame.

timeWaited += targetDeltaTime;

I can do better than that, though. With that code I can avoid a vicious cycle, but it still has no way to recover if the calculations on each frame are too long every frame. So I can help by making it perform fewer calculations when Time.deltaTime is high.

timeWaited += targetDeltaTime * targetDeltaTime / Time.deltaTime;

But how do I get targetDeltaTime? According to the Application.targetFrameRate documentation, Unity's target frame rate varies by platform. Does Unity have a way to return that platform-specific target frame rate? Ideally there would be some function that would return Application.targetFrameRate if it's in effect (is not set to -1 and QualitySettings.vSyncCount is set to 0), or the platform's default frame rate if Application.targetFrameRate is being ignored.
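A minimal sketch of the kind of helper described above, under stated assumptions rather than as a built-in Unity call. `FrameRateEstimator`, `EstimateTargetFrameRate`, `EstimateTargetDeltaTime`, and `fallbackFps` are hypothetical names. In a Unity script the first two arguments would come from `Application.targetFrameRate` and `QualitySettings.vSyncCount`, and the refresh rate from the display (for example `Screen.currentResolution.refreshRate` in Unity versions of that era). The sketch assumes the documented desktop behavior, where a non-zero `vSyncCount` overrides `targetFrameRate`. It does not detect the platform default; the caller supplies a guess for it.

```csharp
public static class FrameRateEstimator
{
    // Hypothetical helper: estimates the frame rate Unity is aiming for.
    // targetFrameRate: value of Application.targetFrameRate (-1 means "platform default").
    // vSyncCount:      value of QualitySettings.vSyncCount (0 means vsync is off).
    // refreshRateHz:   display refresh rate in Hz.
    // fallbackFps:     the caller's assumed platform default (a guess, not queried from Unity).
    public static double EstimateTargetFrameRate(
        int targetFrameRate, int vSyncCount, double refreshRateHz, double fallbackFps)
    {
        // With vsync on, one frame is shown every vSyncCount display refreshes.
        if (vSyncCount > 0 && refreshRateHz > 0)
        {
            return refreshRateHz / vSyncCount;
        }

        // With vsync off, an explicit target frame rate is in effect.
        if (targetFrameRate > 0)
        {
            return targetFrameRate;
        }

        // Neither setting applies, so fall back to the assumed platform default.
        return fallbackFps;
    }

    // Seconds per frame at the estimated target rate.
    public static double EstimateTargetDeltaTime(
        int targetFrameRate, int vSyncCount, double refreshRateHz, double fallbackFps)
    {
        return 1.0 / EstimateTargetFrameRate(targetFrameRate, vSyncCount, refreshRateHz, fallbackFps);
    }
}
```

The result of `EstimateTargetDeltaTime` would stand in for `targetDeltaTime` in the lines above.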

EDIT: As requested, here is more of my code.

    public IEnumerator SearchDepthFirst()
    {
        // ...
        while (nodesRemain)
        {
            // ...
            while (ShouldYield())
            {
                yield return null;
            }
            // ...
        }
        // ...
    }

    private bool ShouldYield()
    {
        // ...
        if (SecondsWaited < SecondsPerNode)
        {
            SecondsWaited += SecondsPerFrameSquared / Time.deltaTime;
            return true;
        }
        else
        {
            SecondsWaited -= SecondsPerNode;
            return false;
        }
    }

Answer by Bunny83 · Nov 26, 2017 at 12:43 AM

First of all, it can't crash your game, since Time.deltaTime has a max value of 0.3333 (or 0.3, I can't remember). So even when a frame takes longer than that, deltaTime will not grow above this value.

You may want to have a look at my CustomFixedUpdate helper class, which does pretty much the same thing Unity does for its own FixedUpdate but can run at any frame rate you like. It also has a safety check (which is disabled by default, since Time.deltaTime is already limited).

If you don't actually require or want a certain number of iterations per second but just a "load limiter", you may just want to check Time.realtimeSinceStartup each iteration.
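A rough sketch of what such a load limiter could look like, not taken from the CustomFixedUpdate class. `LoadLimiter`, `Run`, `step`, `clock`, and `budgetSeconds` are hypothetical names. The clock is passed in so the block stays free of Unity types; inside Unity it could be `() => Time.realtimeSinceStartup`.

```csharp
using System;
using System.Collections;

public static class LoadLimiter
{
    // Runs `step` repeatedly until it returns false (no work left).
    // Once at least `budgetSeconds` has elapsed since the last resume, yields once
    // so the caller (for example a Unity coroutine) can finish the frame.
    // `clock` returns the current time in seconds.
    public static IEnumerator Run(Func<bool> step, Func<double> clock, double budgetSeconds)
    {
        double sliceStart = clock();
        while (step())
        {
            if (clock() - sliceStart >= budgetSeconds)
            {
                yield return null;     // hand control back until the next frame
                sliceStart = clock();  // start a fresh budget after resuming
            }
        }
    }
}
```

Because the budget is measured in real time per frame, a slow frame does not cause more work to be scheduled on the next one.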

To me this line makes no sense:

timeWaited += targetDeltaTime * targetDeltaTime / Time.deltaTime;

This will make the time step even smaller when the framerate goes down. So if you yield based on the passed "timeWaited" you will actually do more calculations when the framerate goes down. I think you should post more code about your actual logic in your coroutine.

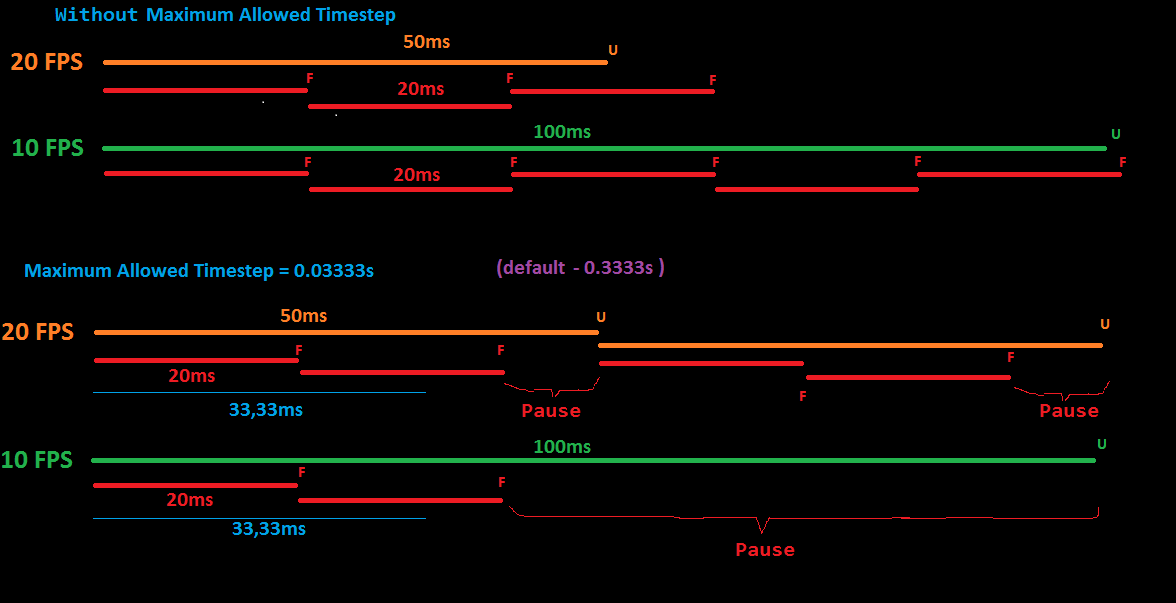

This makes it look like Maximum Allowed Timestep only affects FixedUpdate.

The picture is part of my explanation of the Time class. F is the physics loop, U is the main loop.

Maximum Allowed Timestep affects the physics loop. The physics loop must always be invoked in fixed timesteps measured in game time (Time.time). If the main loop (which contains Update) is invoked rarely, we will see jittery movement of objects because the physics loop will be updated several times in a row. To prevent that, we must stop invoking the physics loop. But the physics loop must be invoked in fixed timesteps, so for a moment we stop measuring time. Maximum Allowed Timestep determines when to stop time for a moment: if the time between Updates is greater than Maximum Allowed Timestep, we stop time for a moment.

This will make the time step even smaller when the framerate goes down.

Did you mean "when the framerate goes up"? Remember that frame rate and deltaTime are inverses. If I divide by deltaTime, that means the bigger deltaTime is the fewer calculations I'll perform and vice versa.

No, I did mean it the way I said. However, now that you have posted your ShouldYield method, I can only say it makes no sense. You have your return values reversed. As long as "SecondsWaited" is smaller than "SecondsPerNode" you currently yield. So you yield every iteration until SecondsWaited is equal or greater, and only then do you skip the yield once. This does not make much sense: it will execute one iteration every frame, and from time to time two iterations in one frame. The other way round would make more sense.

However, as I said, the lower the frame rate gets (that is, the larger deltaTime gets), the slower you reach your timeout value. Keep in mind that you divide the value you're going to add to your wait time by deltaTime. So the bigger deltaTime is, the smaller the change to your SecondsWaited variable gets each iteration, and the more iterations it takes to actually hit your limit.

Well, getting into the minutiae of my implementation is a bit of a tangent here and doesn't have much to do with my question, but I'll try to explain. My code may be hard to understand because it's been complicated by my slowdown-correction code. So to explain it more easily, imagine that the line in question reads:

SecondsWaited += Time.deltaTime ;

You are correct that I yield every time SecondsWaited is smaller than SecondsPerNode. SecondsPerNode is something the user is able to influence directly to slow down or speed up the process. Remember that the point of this admittedly strange-looking algorithm is to allow either one calculation in many frames or many calculations in one frame or anything in between depending on the value of SecondsPerNode.

So let's say the user wants it really slow to see what's going on. SecondsPerNode will be really high, so it will take a long time for SecondsWaited to get bigger than SecondsPerNode. Once it does, SecondsWaited will go down to something close to zero and ShouldYield will return false, meaning the inner while loop will end and one calculation can occur. In the meantime, SecondsWaited grows by a little bit each frame until it's big enough to trigger a calculation.

Now let's say the user doesn't want to wait and so SecondsPerNode is really low. After the first yield, SecondsWaited will be much larger than SecondsPerNode and so ShouldYield() will return false many times in a row, allowing many calculations in one frame. After each calculation ShouldYield() is called and SecondsWaited shrinks a little smaller until finally it's smaller than SecondsPerNode and the coroutine can yield until the next frame.

Let me know if that explanation makes sufficient sense before I explain why I'm now dividing by Time.deltaTime.
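The accumulator described above can be sketched in plain C#, assuming the simplified `SecondsWaited += Time.deltaTime` form. `NodeRateLimiter`, `NodesForFrame`, and `maxNodesPerFrame` are hypothetical names. The per-frame cap is a swapped-in safeguard against the runaway case from the question; it is not what the posted code does, which divides by Time.deltaTime instead.

```csharp
using System;

public sealed class NodeRateLimiter
{
    private readonly double secondsPerNode;
    private readonly int maxNodesPerFrame;
    private double secondsWaited;

    public NodeRateLimiter(double secondsPerNode, int maxNodesPerFrame)
    {
        if (secondsPerNode <= 0) throw new ArgumentOutOfRangeException(nameof(secondsPerNode));
        if (maxNodesPerFrame < 1) throw new ArgumentOutOfRangeException(nameof(maxNodesPerFrame));
        this.secondsPerNode = secondsPerNode;
        this.maxNodesPerFrame = maxNodesPerFrame;
    }

    // Call once per frame with that frame's delta time.
    // Returns how many nodes to process before yielding again.
    public int NodesForFrame(double deltaTime)
    {
        secondsWaited += deltaTime;

        int nodes = 0;
        while (secondsWaited >= secondsPerNode && nodes < maxNodesPerFrame)
        {
            secondsWaited -= secondsPerNode;
            nodes++;
        }

        // If the cap cut the loop short, discard most of the backlog so that
        // one slow frame cannot schedule ever more work for the next one.
        secondsWaited = Math.Min(secondsWaited, secondsPerNode);
        return nodes;
    }
}
```

With a large SecondsPerNode this returns 0 on most frames and 1 occasionally; with a small one it returns several nodes per frame, up to the cap. In a coroutine, the return value of `NodesForFrame(Time.deltaTime)` would decide how many calculations to run before the next `yield return null`.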
