
Why does Convert.ToDecimal use Double.ToString internally?

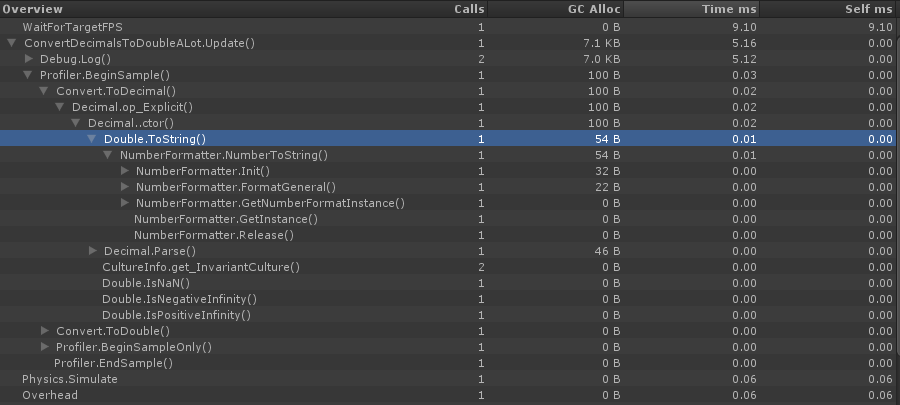

I'm focused on improving the performance of a project and noticed some very strange behavior. When converting a Double to a Decimal, the Convert code internally calls Double.ToString!?! This allocates a fair amount of memory in my project because it gets called 30-40 times a frame. I can probably refactor away from using Convert, but this internal behavior really surprises me.

```csharp
Convert.ToDecimal(theDouble);
```

The Profiler:

Answer by whydoidoit · Mar 03, 2014 at 06:30 PM

Well, this is the code that Decimal uses to convert a double in the Mono version that ships with Unity:

```csharp
/// <summary>
/// Initializes a new instance of <see cref="T:System.Decimal"/> to the value of the specified double-precision floating-point number.
/// </summary>
/// <param name="value">The value to represent as a <see cref="T:System.Decimal"/>. </param><exception cref="T:System.OverflowException"><paramref name="value"/> is greater than <see cref="F:System.Decimal.MaxValue"/> or less than <see cref="F:System.Decimal.MinValue"/>.-or- <paramref name="value"/> is <see cref="F:System.Double.NaN"/>, <see cref="F:System.Double.PositiveInfinity"/>, or <see cref="F:System.Double.NegativeInfinity"/>. </exception>
public Decimal(double value)
{
    if (value > 7.92281625142643E+28 || value < -7.92281625142643E+28 || double.IsNaN(value) || double.IsNegativeInfinity(value) || double.IsPositiveInfinity(value))
    {
        string fmt = "Value {0} is greater than Decimal.MaxValue or less than Decimal.MinValue";
        // The decompiler emitted a non-compilable "__Boxed<double>" local here;
        // the original code simply boxes the double into the argument array.
        object[] objArray = new object[] { value };
        throw new OverflowException(Locale.GetText(fmt, objArray));
    }
    else
    {
        // The conversion itself: format the double as a string, then parse that string.
        Decimal num = Decimal.Parse(value.ToString((IFormatProvider) CultureInfo.InvariantCulture), NumberStyles.Float, (IFormatProvider) CultureInfo.InvariantCulture);
        this.flags = num.flags;
        this.hi = num.hi;
        this.lo = num.lo;
        this.mid = num.mid;
    }
}
```
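To make the allocation easier to see, here is a standalone sketch of what the constructor above does, written against public APIs only (`double.ToString`, `decimal.Parse`) rather than Mono's internals. `DecimalViaString` is a hypothetical name. The bound 7.92281625142643E+28 is `decimal.MaxValue` (2^96 - 1 = 79228162514264337593543950335) written with 15 significant digits. Which digits survive the round trip depends on how the runtime formats doubles (Mono of this era, like .NET Framework, uses 15 significant digits by default, while .NET Core 3.0 and later print the shortest round-trippable string), so the examples stick to short values.

```csharp
using System;
using System.Globalization;

// Hypothetical helper mirroring the quoted Mono constructor:
// a range check, then a double -> string -> decimal round trip.
static decimal DecimalViaString(double value)
{
    // Same bound as the decompiled code (decimal.MaxValue to 15 significant digits).
    if (double.IsNaN(value) || double.IsInfinity(value) ||
        value > 7.92281625142643E+28 || value < -7.92281625142643E+28)
    {
        throw new OverflowException("Value is greater than Decimal.MaxValue or less than Decimal.MinValue");
    }

    // This ToString call allocates a new string on every conversion,
    // which is the garbage that shows up in the profiler.
    string text = value.ToString(CultureInfo.InvariantCulture);
    return decimal.Parse(text, NumberStyles.Float, CultureInfo.InvariantCulture);
}

Console.WriteLine(DecimalViaString(0.1).ToString(CultureInfo.InvariantCulture));     // 0.1
Console.WriteLine(DecimalViaString(-1234.5).ToString(CultureInfo.InvariantCulture)); // -1234.5
```

Every call builds and discards one string, so 30-40 conversions a frame means 30-40 short-lived strings a frame.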

And here is the heart of the parser:

```csharp
private static bool PerformParse(string s, NumberStyles style, IFormatProvider provider, out Decimal res, bool throwex)
{
    NumberFormatInfo instance = NumberFormatInfo.GetInstance(provider);
    int decPos;
    bool isNegative;
    bool expFlag;
    int exp;
    // stripStyles appears to return the bare digit string, with decPos marking
    // where the decimal point was (inferred from how s is used below).
    s = Decimal.stripStyles(s, style, instance, out decPos, out isNegative, out expFlag, out exp, throwex);
    if (s == null)
    {
        res = new Decimal(0);
        return false;
    }
    else if (decPos < 0)
    {
        if (throwex)
            throw new Exception(Locale.GetText("Error in System.Decimal.Parse"));
        res = new Decimal(0);
        return false;
    }
    else
    {
        // Skip leading zeros in the integer part (48 == '0').
        int length1 = s.Length;
        int startIndex = 0;
        while (startIndex < decPos && (int) s[startIndex] == 48)
            ++startIndex;
        if (startIndex > 1 && length1 > 1)
        {
            s = s.Substring(startIndex, length1 - startIndex);
            decPos -= startIndex;
        }
        // Keep at most 28 digits (27 when decPos == 0), plus one more if the
        // leading digits do not exceed those of Decimal.MaxValue.
        int length2 = decPos != 0 ? 28 : 27;
        int length3 = s.Length;
        if (length3 >= length2 + 1 && string.Compare(s, 0, "79228162514264337593543950335", 0, length2 + 1, false, CultureInfo.InvariantCulture) <= 0)
            ++length2;
        // More digits than fit and some are fractional: truncate and round.
        if (length3 > length2 && decPos < length3)
        {
            int num1 = (int) s[length2] - 48;   // first dropped digit
            s = s.Substring(0, length2);
            bool flag = false;
            if (num1 > 5)
                flag = true;
            else if (num1 == 5)
                flag = isNegative || ((int) s[length2 - 1] - 48 & 1) == 1;
            if (flag)
            {
                // Propagate the carry leftwards; 9s (57 == '9') become '0'.
                char[] chArray = s.ToCharArray();
                int index;
                for (index = length2 - 1; index >= 0; chArray[index--] = '0')
                {
                    int num2 = (int) chArray[index] - 48;
                    if ((int) chArray[index] != 57)
                    {
                        chArray[index] = (char) (num2 + 49);   // '0' + digit + 1, i.e. the next digit
                        break;
                    }
                }
                if (index == -1 && (int) chArray[0] == 48)
                {
                    ++decPos;
                    s = "1".PadRight(decPos, '0');
                }
                else
                    s = new string(chArray);
            }
        }
        Decimal val;
        if (Decimal.string2decimal(out val, s, (uint) decPos, 0) != 0)
        {
            if (throwex)
                throw new OverflowException();
            res = new Decimal(0);
            return false;
        }
        else if (expFlag && Decimal.decimalSetExponent(ref val, exp) != 0)
        {
            if (throwex)
                throw new OverflowException();
            res = new Decimal(0);
            return false;
        }
        else
        {
            if (isNegative)
                val.flags ^= 0x80000000u;   // flip the sign bit (decompiled as "(uint) int.MinValue")
            res = val;
            return true;
        }
    }
}
```
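The rounding step in the middle of `PerformParse` is the least obvious part. Below is a simplified, standalone re-implementation for illustration only; `RoundDigits` and its signature are hypothetical, not part of Mono. It takes a digit-only string, keeps the first `keep` digits (assumed to be at least 1), and applies the rule from the decompiled code: round up when the first dropped digit is above 5, and on exactly 5 round up when the number is negative or the last kept digit is odd.

```csharp
using System;

// Hypothetical, simplified version of PerformParse's truncate-and-round step.
// digits: digit-only string; keep: number of leading digits to keep (>= 1).
static string RoundDigits(string digits, int keep, bool isNegative)
{
    if (digits.Length <= keep)
        return digits;                     // nothing to drop

    int next = digits[keep] - '0';         // first dropped digit
    char[] kept = digits.Substring(0, keep).ToCharArray();
    int last = kept[keep - 1] - '0';       // last kept digit

    // Same decision as the decompiled code.
    bool roundUp = next > 5 || (next == 5 && (isNegative || (last & 1) == 1));
    if (!roundUp)
        return new string(kept);

    // Carry: trailing 9s become 0s until a digit can be incremented.
    int i = keep - 1;
    for (; i >= 0; i--)
    {
        if (kept[i] != '9')
        {
            kept[i]++;
            break;
        }
        kept[i] = '0';
    }

    // The carry ran off the front (e.g. 9999 -> 10000): prepend a 1.
    return i < 0 ? "1" + new string(kept) : new string(kept);
}

Console.WriteLine(RoundDigits("12355", 4, false)); // 1236
Console.WriteLine(RoundDigits("99999", 4, false)); // 10000
```

Like the decompiled code, this looks only at the first dropped digit, so `12345001` kept to four digits gives `1234` even though the dropped part is slightly above one half. Ties on negative numbers always round away from zero, while positive ties round to even. Unlike the original, the all-nines case simply prepends a `1`; `PerformParse` instead rebuilds the string with `PadRight` and moves `decPos`.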

It's a very simple implementation, and it differs from the current .NET version. I guess the real question is whether you can get away without using Decimals at all; they're pretty expensive even without this.
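If a decimal really is needed every frame and the values only require a fixed number of decimal places, one string-free option is to scale the double to an integer and build the decimal directly from its 96-bit integer and scale using the `decimal(int lo, int mid, int hi, bool isNegative, byte scale)` constructor. This is a sketch under stated assumptions, not a drop-in replacement: `ToDecimalFixed` is a hypothetical name, it assumes `scale <= 28` and that `|value| * 10^scale` fits comfortably in a `long`, it rounds to `scale` places (so results can differ from `Convert.ToDecimal`'s 15-significant-digit behavior), and it has not been profiled on Unity's Mono.

```csharp
using System;
using System.Globalization;

// Hypothetical allocation-free double -> decimal conversion with a fixed scale.
// Assumes: scale <= 28, value is finite, and |value| * 10^scale fits in a long.
static decimal ToDecimalFixed(double value, byte scale)
{
    double factor = 1.0;
    for (int k = 0; k < scale; k++)
        factor *= 10.0;                    // powers of ten up to 1e22 are exact doubles

    // Nearest integer in units of 10^-scale (Math.Round defaults to round-half-to-even).
    long scaled = (long)Math.Round(value * factor);

    bool negative = scaled < 0;
    ulong magnitude = negative ? (ulong)(-scaled) : (ulong)scaled;

    // A decimal is a 96-bit integer plus a sign and a power-of-ten scale;
    // a long-sized magnitude only needs the low and middle 32-bit words.
    int lo = (int)(uint)(magnitude & 0xFFFFFFFF);
    int mid = (int)(uint)(magnitude >> 32);
    return new decimal(lo, mid, 0, negative, scale);
}

Console.WriteLine(ToDecimalFixed(-2.25, 2).ToString(CultureInfo.InvariantCulture)); // -2.25
Console.WriteLine(ToDecimalFixed(0.1, 6).ToString(CultureInfo.InvariantCulture));   // 0.100000
```

Note that the result carries the requested scale, so `0.1` converted with six places prints as `0.100000`; it still compares equal to `0.1m`.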

By the way, I strongly suggest getting hold of a decompiler when you are looking at this stuff; it's the only way to know what is really going on. I use dotPeek from JetBrains.

Thanks! I've never really gotten into the decompiler stuff. Thanks for confirming what I suspected.
