
How do I get mesh data into a post-processing shader (or render a mesh shader to a texture)?

I have created a water ripple effect to distort surfaces. Right now it creates two texture buffers and ping-pongs between them as in this openFrameworks addon:

https://github.com/patriciogonzalezvivo/ofxFX

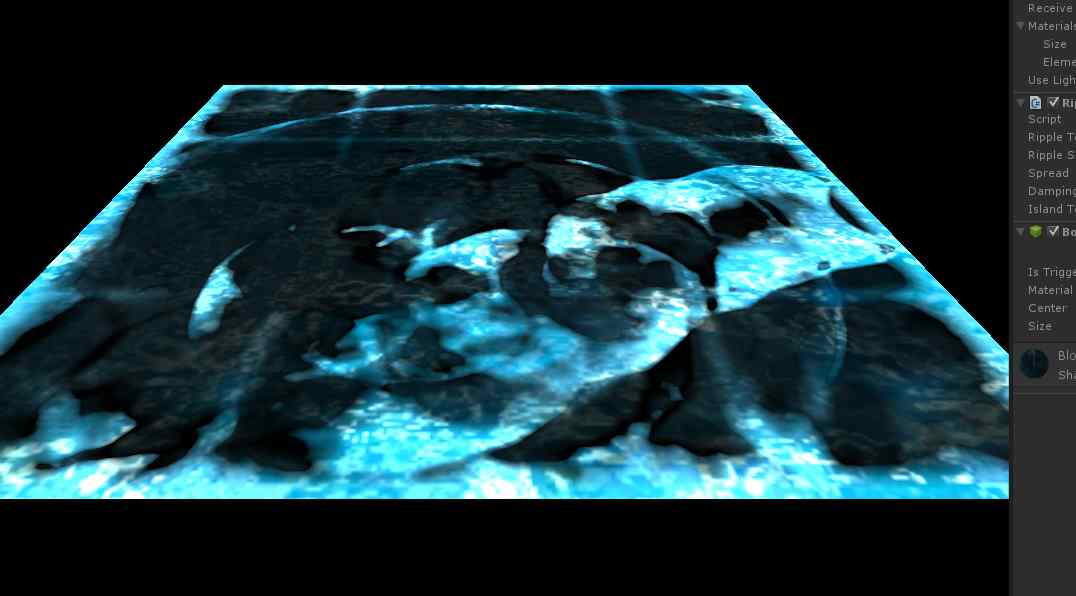
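For reference, the two-buffer ("ping-pong") update can be sketched on the CPU. This is the classic height-field ripple recurrence: each cell becomes half the sum of its four neighbours in the current buffer, minus its own value in the previous buffer, times a damping factor. It is an assumption that the ofxFX shader does something equivalent. The names `ripple_step`, `disturb` and `damping` are hypothetical. In Unity, each step would be one `Graphics.Blit` between two RenderTextures rather than a Python loop.

```python
from typing import List

Grid = List[List[float]]


def ripple_step(current: Grid, previous: Grid, damping: float = 0.99) -> Grid:
    """Compute the next ripple height field from the two most recent ones.

    Interior cell: (sum of 4 neighbours in `current`) / 2 - `previous` cell,
    multiplied by `damping`. Border cells stay at 0 (a fixed edge).
    """
    h, w = len(current), len(current[0])
    nxt = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            neighbours = (current[y][x - 1] + current[y][x + 1]
                          + current[y - 1][x] + current[y + 1][x])
            nxt[y][x] = (neighbours / 2.0 - previous[y][x]) * damping
    return nxt


def disturb(grid: Grid, x: int, y: int, amount: float = 1.0) -> None:
    """'Drop a pebble' by raising one cell of the height field in place."""
    grid[y][x] += amount


# Usage: two buffers that swap roles every step (the RenderTexture ping-pong).
size = 9
previous = [[0.0] * size for _ in range(size)]
current = [[0.0] * size for _ in range(size)]
disturb(current, size // 2, size // 2)
for _ in range(3):
    previous, current = current, ripple_step(current, previous)
```

Swapping the two buffers every step is what the RenderTexture ping-pong does. The fixed zero border stands in for whatever wrap or clamp behaviour the real shader uses.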

I use Graphics.Blit() in the update loop to apply the effect, passing in the buffers and writing to a texture that I use as a normal map (which is how the effect is eventually displayed). Unfortunately, the powers that be have decided that they want this effect not on flat planes but on this big old deforming mesh we have in the game. Applying the generated normal-map texture to this mesh is easy; however, I still need to disturb the texture occasionally to create the ripples.
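Turning the ripple heights into a normal map is typically done with finite differences. The sketch below uses central differences. It assumes the texture stores tangent-space normals packed as `n * 0.5 + 0.5` in RGB. The channel layout your material actually expects (for example, how Unity imports or packs normal maps) may differ. `height_to_normal_map` and `strength` are hypothetical names.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
RGB = Tuple[float, float, float]


def height_to_normal_map(height: Grid, strength: float = 1.0) -> List[List[RGB]]:
    """Convert a height field to tangent-space normals packed into [0, 1] RGB."""
    h, w = len(height), len(height[0])

    def sample(x: int, y: int) -> float:
        # Clamp-to-edge addressing, like a texture with Clamp wrap mode.
        return height[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out: List[List[RGB]] = []
    for y in range(h):
        row: List[RGB] = []
        for x in range(w):
            # Central differences approximate the slope in x and y.
            dx = (sample(x + 1, y) - sample(x - 1, y)) * 0.5 * strength
            dy = (sample(x, y + 1) - sample(x, y - 1)) * 0.5 * strength
            # For a surface z = h(x, y) the unnormalized normal is (-dh/dx, -dh/dy, 1).
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            nx, ny, nz = nx / length, ny / length, nz / length
            # Pack each component from [-1, 1] into [0, 1] so it fits in a color.
            row.append((nx * 0.5 + 0.5, ny * 0.5 + 0.5, nz * 0.5 + 0.5))
        out.append(row)
    return out
```

A flat height field maps to (0.5, 0.5, 1.0), the familiar "flat" normal-map blue. `strength` scales how pronounced the ripples look.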

For this, I need the UV coordinates of some point on the mesh to feed to my shader. A mesh collider is not an option, as the mesh deforms (also, that would be SUPER expensive). The only way I can think of doing this is to bake the mesh every frame, loop through the vertices to find the closest one in screen space (or maybe use a compute shader for that), look up its UV coordinates, and THEN send those to my shader.
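The brute-force lookup described above could look roughly like this. It is a sketch under several assumptions:

* The baked vertices are already in world space.
* `mvp` is a row-major view-projection (times model) matrix that multiplies column vectors.
* The screen origin is bottom-left.

In Unity, baking would likely be `SkinnedMeshRenderer.BakeMesh` (assuming the deformation is skinning), and the projection step corresponds to `Camera.WorldToScreenPoint`. Here both are plain Python so the logic can be checked. All function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Mat4 = Sequence[Sequence[float]]  # row-major, multiplies column vectors


def project_to_screen(p: Vec3, mvp: Mat4, width: float, height: float) -> Optional[Vec2]:
    """Project a point to pixel coordinates; None if it is behind the camera."""
    x, y, z = p
    clip = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in mvp]
    w = clip[3]
    if w <= 0.0:
        return None
    ndc_x, ndc_y = clip[0] / w, clip[1] / w
    # NDC [-1, 1] -> pixels, origin at the bottom-left corner.
    return ((ndc_x * 0.5 + 0.5) * width, (ndc_y * 0.5 + 0.5) * height)


def closest_vertex_uv(vertices: Sequence[Vec3], uvs: Sequence[Vec2], mvp: Mat4,
                      width: float, height: float, target: Vec2) -> Optional[Vec2]:
    """Return the UV of the vertex whose screen projection is nearest `target`."""
    best_uv: Optional[Vec2] = None
    best_d2 = float("inf")
    for v, uv in zip(vertices, uvs):
        s = project_to_screen(v, mvp, width, height)
        if s is None:
            continue  # skip vertices behind the camera
        d2 = (s[0] - target[0]) ** 2 + (s[1] - target[1]) ** 2
        if d2 < best_d2:
            best_d2, best_uv = d2, uv
    return best_uv
```

This approach has two caveats:

* It returns the UV of the nearest *vertex*, not of the exact surface point.
* It ignores occlusion, so a vertex on the far side of the mesh can win if it happens to project closer.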

Either that, or I need to attach my effect to the mesh itself, but either way I need to render to a texture, which does not seem possible outside of Graphics.Blit. All in all, this seems ridiculously complicated, considering all I need is a single texture coordinate for a point on the mesh given in screen, object, or world space (really any of the three would do). This information is clearly somewhere within Unity. How do I get to it?
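A more exact alternative to the nearest-vertex search is to intersect a ray with the baked triangles and interpolate the UVs using the hit's barycentric coordinates. This is essentially what `RaycastHit.textureCoord` reports, but that property requires a `MeshCollider`, which is ruled out here. The sketch below uses the Möller-Trumbore ray-triangle test. It assumes that:

* `triangles` is a flat index list with three indices per triangle (the same layout as Unity's `Mesh.triangles`).
* The ray and the vertices are in the same space.

Function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[Tuple[float, float, float]]:
    """Möller-Trumbore: return (t, u, v) for a hit in front of the origin, else None."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv
    return (t, u, v) if t > eps else None


def raycast_uv(origin: Vec3, direction: Vec3, vertices: Sequence[Vec3],
               triangles: Sequence[int], uvs: Sequence[Vec2]) -> Optional[Vec2]:
    """UV at the nearest hit, interpolated with barycentric weights."""
    best_t = float("inf")
    best_uv: Optional[Vec2] = None
    for i in range(0, len(triangles), 3):
        a, b, c = triangles[i], triangles[i + 1], triangles[i + 2]
        hit = ray_triangle(origin, direction, vertices[a], vertices[b], vertices[c])
        if hit is None or hit[0] >= best_t:
            continue
        t, u, v = hit
        w = 1.0 - u - v  # barycentric weight of vertex a
        best_t = t
        best_uv = (w * uvs[a][0] + u * uvs[b][0] + v * uvs[c][0],
                   w * uvs[a][1] + u * uvs[b][1] + v * uvs[c][1])
    return best_uv
```

In Unity the ray could come from `Camera.ScreenPointToRay`. Like the vertex loop, this is one pass over the mesh per query, but it yields an interpolated UV at the actual surface hit. It also respects occlusion by keeping the nearest `t`.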

The ripple effect, on a plane.


The 3D model to which I need to apply this effect.
