
What is the meaning of a vertex shader for a post-processing shader?

From my understanding, a vertex shader is applied to each vertex in its local (object) space. I tried adding a simple post-processing shader and removing the 'UnityObjectToClipPos' call in the vertex shader. This resulted in the scene being rendered at 1/4 the size. Does anyone know why this happens? What exactly are the vertices that get passed to the vertex shader in this context? I've added the shader code (with the MVP multiplication already removed), the script I've attached to the camera, and the before and after of removing the MVP multiplication.

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'


Shader "Custom/DepthGrayscale" {
    Properties
    {
        _MainTex ("Texture", 2D) = "white" {}
    }
    SubShader
    {
        // No culling or depth
        Cull Off ZWrite Off ZTest Always

        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag

            #include "UnityCG.cginc"

            struct appdata
            {
                float4 vertex : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct v2f
            {
                float2 uv : TEXCOORD0;
                float4 vertex : SV_POSITION;
            };

            v2f vert (appdata v)
            {
                v2f o;
                o.vertex = v.vertex;
                o.uv = v.uv;
                return o;
            }

            sampler2D _MainTex;

            fixed4 frag (v2f i) : SV_Target
            {
                fixed4 col = tex2D(_MainTex, i.uv);
                // just invert the colors
                col.rgb = 1 - col.rgb;
                return col;
            }
            ENDCG
        }
    }
}

Script attached to camera object:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class PostProcessDepthGrayscale : MonoBehaviour
{
    public Material mat;

    void Start()
    {
        GetComponent<Camera>().depthTextureMode = DepthTextureMode.Depth;
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        Graphics.Blit(source, destination, mat);
    }
}

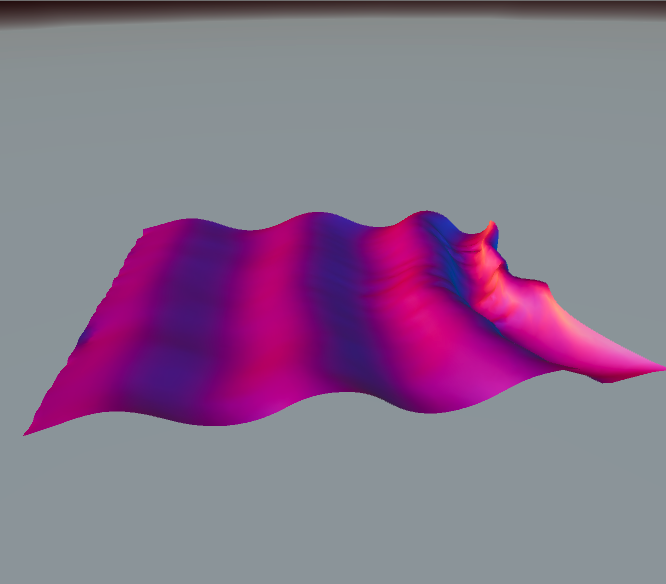

Before removing the MVP multiplication:

After:

Answer by Namey5 · Aug 14, 2020 at 05:06 AM

You aren't wrong exactly, but in this case 'transforming to clip space' probably isn't doing what you think it is. When we display a 3D object on screen, its screen-relative coordinates are said to be in NDC (normalised device coordinates), which range over [-1,1] on all axes. This isn't 100% true for all platforms, though: depending on the rendering API you are using, the y-axis can be flipped and the z-axis can use a different range or direction.

When mapping a 3D object to the screen, we multiply its object-space coordinates by the MVP matrix, transforming them into world space, then view space, and finally clip space. Clip space is converted into NDC by the perspective divide, which is done automatically between the vertex and fragment shaders.

In the case of a post-processing effect, converting between all these spaces isn't necessary. We can just define the coordinates in NDC and use those, like you would be doing here. However, in doing so you would need to take into account the per-platform differences that would ordinarily be handled by the matrices themselves. As a result, I would imagine that the screen-space blit quad is defined over [0,1] (hence it only covering a quarter of the screen here, since [0,1] is half of the [-1,1] range on each axis) and that the MVP matrix is overridden with a simple transformation matrix that just scales and offsets those positions and adjusts for platform-specific differences.
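To make that concrete, here is a minimal sketch of a vertex function that skips the matrix and does the remap by hand. It assumes, as guessed above, that the blit quad's positions span [0,1] on x and y; that range is an assumption, not something confirmed by Unity's documentation. It also ignores the per-platform y-flip, so on some graphics APIs the image may come out upside down.

```hlsl
struct appdata
{
    float4 vertex : POSITION;
    float2 uv : TEXCOORD0;
};

struct v2f
{
    float2 uv : TEXCOORD0;
    float4 vertex : SV_POSITION;
};

v2f vert (appdata v)
{
    v2f o;
    // Assumption: v.vertex.xy is in [0,1]. Scale by 2 and shift by -1 so the
    // quad spans [-1,1], i.e. the full clip-space square.
    // w = 1 makes the perspective divide a no-op, so clip space equals NDC here.
    o.vertex = float4(v.vertex.xy * 2.0 - 1.0, 0.0, 1.0);
    o.uv = v.uv;
    return o;
}
```

This is meant to drop into the pass from the question in place of its vert function. In practice UnityObjectToClipPos is the safer choice, since it lets Unity handle the platform differences.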

Thanks, I think that perfectly explains why it's taking up a quarter of the screen. I'm still unsure about what the vertices are in this case, though.

Unity is a 3D engine, and so everything (including 2D objects) is drawn as a 3D mesh. Under the hood, Graphics.Blit would look something like this:

static void Blit (RenderTexture src, RenderTexture dest, Material mat, int pass = 0)
{
    Matrix4x4 mvp = Matrix4x4.Translate (new Vector3 (-1f, -1f, 0f)) * Matrix4x4.Scale (new Vector3 (2f, 2f, 1f));

    //'GetGPUProjectionMatrix' converts the supplied projection matrix based on the rendering API
    //I have no idea how it would work on a regular transformation matrix like this, but you get the idea
    mvp = GL.GetGPUProjectionMatrix (mvp);

    Shader.SetGlobalMatrix ("UNITY_MATRIX_MVP", mvp);
    mat.SetTexture ("_MainTex", src);
    mat.SetPass (pass);

    Graphics.SetRenderTarget (dest);

    //Where 'm_QuadMesh' is a mesh with vertices;
    // bottom left - [0,0]
    // top left - [0,1]
    // top right - [1,1]
    // bottom right - [1,0]
    Graphics.DrawMeshNow (m_QuadMesh, Matrix4x4.identity);
}

Obviously there are a few things wrong here, but this is pretty much how you would go about making your own Blit function (it comes in handy more often than you would hope).
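Working through the matrix in that sketch shows why it fills the screen. This assumes Unity's usual column-vector convention (the scale is applied first, then the translation) and leaves out any platform-specific adjustment:

```latex
M = T(-1,-1,0)\,S(2,2,1) =
\begin{pmatrix}
2 & 0 & 0 & -1 \\
0 & 2 & 0 & -1 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{pmatrix},
\qquad
M \begin{pmatrix} x \\ y \\ 0 \\ 1 \end{pmatrix}
= \begin{pmatrix} 2x - 1 \\ 2y - 1 \\ 0 \\ 1 \end{pmatrix}
```

So the corners [0,0], [0,1], [1,1] and [1,0] land on [-1,-1], [-1,1], [1,1] and [1,-1], the corners of the NDC square. Without the matrix the quad stays in [0,1], which is half the range on each axis and therefore a quarter of the screen area.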

Awesome, that clears everything up. Much appreciated!
