
Make triplanar mapped textures stay in place?

Hi everybody!

My goal is to really quickly texture objects that have been created by slapping multiple primitives together (really tight time constraints on this project).

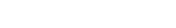

In order to avoid UVs, I'd like to texture them using a triplanar mapping approach, based on worldspace-coordinates of the objects. This way, no matter how I stretch the primitives, the seamless textures always stay un-stretched. So far that works and looks like this:

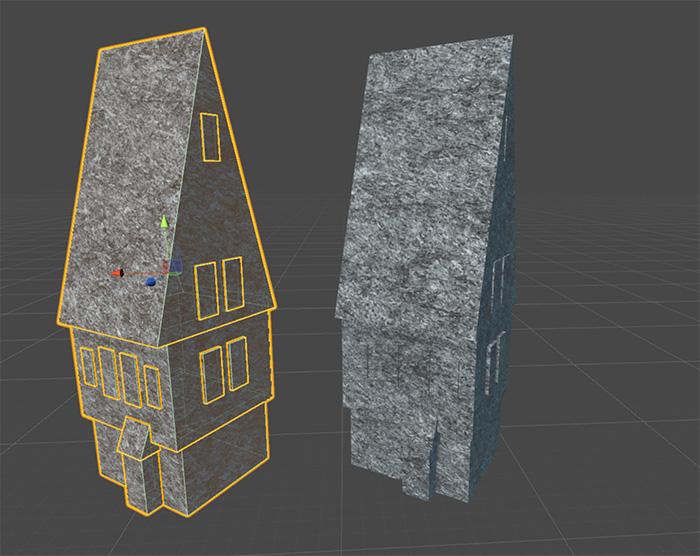

However, the objects will move during runtime, and since I'm using worldspace coordinates for the triplanar mapping, the textures don't move with the objects, like in this GIF:

Anybody got any ideas on how I could make the textures move with the objects? Like some way to bake them onto the models when the scene is loaded? (I can't just use objectspace-coordinates for the mapping, since that will result in stretching of the textures)

Thanks in advance!

Textures mapped using worldspace coordinates, by definition, remain fixed to positions in the world. If your object moves through the world, it will inherit the texture of the position it moves into. If you want to map your texture onto your object without relying on UV-wrapping, you might want to try object-space coordinates instead.

Answer by FortisVenaliter · May 11, 2017 at 03:37 PM

You would have to do the triplanar mapping in the vertex shader to get that effect; otherwise you'll be writing the model to the GPU every frame, which will kill performance.

Look into the shader language Cg. Then import the old Diffuse shader (much easier to modify than the new Standard one) and apply your algorithm in the vertex shader so that it calculates the texture coordinates dynamically. The pixel shader should work without modification.

Thanks for your reply! I'm already doing the calculations for the triplanar mapping in the vertex shader (written in Cg). However, using the objectspace coordinates of the vertices as texture coordinates results in the texture being stretched. Using the worldspace coordinates of the vertices results in the texture not moving with the object, as seen in the GIF in my original post.
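To make the trade-off concrete, here is a minimal Python sketch (plain arithmetic, not Unity or Cg code) of what each coordinate choice yields for a single vertex. The function names and the scale-then-translate transform without rotation are assumptions made for illustration. The third variant, which applies only the object's scale, is not something described in this thread; it is included only to show what coordinates that follow the object without stretching would look like.

```python
def to_world(p_obj, scale, translation):
    """Scale-then-translate transform (rotation omitted; an assumption for brevity)."""
    return tuple(p * s + t for p, s, t in zip(p_obj, scale, translation))

def scaled_object(p_obj, scale):
    """Object-space position with only the object's scale applied (hypothetical helper)."""
    return tuple(p * s for p, s in zip(p_obj, scale))

corner = (0.5, 0.5, 0.5)   # corner of a unit cube in object space
scale = (4.0, 1.0, 1.0)    # cube stretched 4x along X

before = to_world(corner, scale, (0.0, 0.0, 0.0))
after = to_world(corner, scale, (3.0, 0.0, 0.0))   # object moved 3 units along X

# World space: the 4x stretch is accounted for, but the coordinate
# changes when the object moves, so the texture appears to slide.
print("world:", before, "->", after)

# Object space: identical before and after the move, but the 4x stretch
# is ignored, so the texture stretches with the primitive.
print("object:", corner)

# Scale only: matches the world-space value at the origin and does not
# depend on the translation.
print("scaled object:", scaled_object(corner, scale))
```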

Ah, I thought you were shooting for the opposite effect. In that case, I would generate the texture coordinates on the CPU in the editor. Add an editor script that can change the UVs, run it in the editor, then use a regular shader. That way, once the objects are scaled properly, you can apply the texture coordinates properly, and they'll stay static in the game.

I agree with the "bake" method: Create your model in the scene, at position 0,0,0. Then modify its UVs with your mapping algorithm ONCE, then save the mesh asset, which now statically contains the appropriate UVs. You could also perform that entire process during initialization (slower loading but fewer dev steps).
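As a rough illustration of what such a one-time bake could compute, here is a minimal Python sketch. It is not Unity code: the function names, the `tiling` parameter, and the choice of a single dominant-axis projection per vertex (rather than a blended triplanar lookup) are all assumptions. It takes each vertex's world-space position and normal, captured once while the object sits at the origin, and turns them into a fixed UV pair.

```python
def bake_uv(world_pos, world_normal, tiling=1.0):
    """Project a vertex onto the plane facing its dominant normal axis."""
    x, y, z = world_pos
    ax, ay, az = (abs(n) for n in world_normal)
    if ax >= ay and ax >= az:
        u, v = z, y   # face points along X: project onto the ZY plane
    elif ay >= az:
        u, v = x, z   # face points along Y: project onto the XZ plane
    else:
        u, v = x, y   # face points along Z: project onto the XY plane
    return (u * tiling, v * tiling)

def bake_mesh(world_positions, world_normals, tiling=1.0):
    """Compute one UV per vertex; the result is stored once and never updated."""
    return [bake_uv(p, n, tiling) for p, n in zip(world_positions, world_normals)]

# Top face of a box that is 4 units wide (X) and 1 unit deep (Z), at the origin.
top_positions = [(-2.0, 0.5, -0.5), (2.0, 0.5, -0.5), (2.0, 0.5, 0.5), (-2.0, 0.5, 0.5)]
top_normals = [(0.0, 1.0, 0.0)] * 4

uvs = bake_mesh(top_positions, top_normals)
print(uvs)  # U spans 4 units and V spans 1 unit, so the texture tiles instead of stretching
```

Because the UVs are derived from the scaled positions, a face that is four times wider receives four times the UV range, and because they are stored on the mesh they move with the object afterwards. A single projection per vertex suits boxy primitives with per-face normals; smoothly curved surfaces would presumably still need blending in the shader.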

Hey, sorry to necro this post, but can you share more info on how to move the UVs so that they adapt to the world position in order to "bake" the result? How do I know what position I should move each UV to?
