
Help with Neural Networks and Threading

Hi everyone, I've been working on an Artificial Life simulator lately as a sort of pet project. I've been building neural networks for the creatures' brains: networks that take inputs such as raycasts or colliders and output values to "jets" or other force applicators. The neural network seems to work well enough, but my biggest problem is optimizing it. Put simply, it takes too long: even as few as five creatures lag my machine so much that it's unplayable, even with networks of as few as three neurons.

In an attempt to speed up the processing time, I've been working on a solution that involves multithreading; the code is below. I used this answer to build the thread code, but I am seeing no improvements in performance or the number of creatures I can have on the screen. I'm looking for any tips on optimization and threading. I'm mostly self-taught so if my documentation is bad please let me know and I'll clean it up. Thanks!

Thread class:

```csharp
using System.Collections;

public class ThreadedJob
{
    private bool m_IsDone = false;
    private object m_Handle = new object();
    private System.Threading.Thread m_Thread = null;

    // Thread-safe flag that the worker sets once ThreadFunction() has returned.
    public bool IsDone
    {
        get
        {
            bool tmp;
            lock (m_Handle)
            {
                tmp = m_IsDone;
            }
            return tmp;
        }
        set
        {
            lock (m_Handle)
            {
                m_IsDone = value;
            }
        }
    }

    // Creates and starts a brand-new thread on every call.
    public virtual void Start()
    {
        // Reset the flag so Update() does not report the previous run as finished.
        IsDone = false;
        m_Thread = new System.Threading.Thread(Run);
        m_Thread.Start();
    }

    public virtual void Abort()
    {
        m_Thread.Abort();
    }

    protected virtual void ThreadFunction() { }

    protected virtual void OnFinished() { }

    // Polled from the main thread; returns true once the job has finished.
    public virtual bool Update()
    {
        if (IsDone)
        {
            OnFinished();
            return true;
        }
        return false;
    }

    IEnumerator WaitFor()
    {
        while (!Update())
        {
            yield return null;
        }
    }

    private void Run()
    {
        ThreadFunction();
        IsDone = true;
    }
}
```

Neural net handler class (to interact with Unity API):

```csharp
using UnityEngine;

public class BrainInterface : MonoBehaviour
{
    EyeControl[] eyes;
    JetControl[] jets;
    Brain brain;

    public float[] inputValues;
    public float[] outputValues;
    public float[] weights;

    int inputNum, layerNum, neuronPerLayer, outputNum;

    void Start()
    {
        Birth birth = gameObject.GetComponent<Birth>();
        eyes = GetComponentsInChildren<EyeControl>();
        jets = GetComponentsInChildren<JetControl>();

        // The network size is derived from the creature's body parts.
        inputNum = eyes.Length;
        layerNum = 1;
        neuronPerLayer = 1;
        outputNum = jets.Length;
        // Debug.Log("output number is " + outputNum);

        inputValues = new float[inputNum];
        for (int i = 0; i < inputNum; i++)
        {
            inputValues[i] = 0;
        }

        outputValues = new float[outputNum];
        for (int i = 0; i < outputNum; i++)
        {
            outputValues[i] = 0;
        }

        weights = birth.arWeights;
        brain = new Brain(inputNum, layerNum, neuronPerLayer, outputNum, weights);
        brain.inputVal = inputValues;

        foreach (float f in inputValues)
        {
            Debug.Log(f);
        }

        // Starts the first "think" on a worker thread.
        brain.Start();
    }

    void Update()
    {
        // Debug.Log("reached");
        if (brain != null)
        {
            // Debug.Log("brain is thinking");
            if (brain.Update())
            {
                // Debug.Log("completed one think sesh");
                brain.inputVal = inputValues;
                outputValues = brain.output;
                // Starts another worker thread for the next "think".
                brain.Start();
            }
        }

        for (int j = 0; j < jets.Length; j++)
        {
            jets[j].setSpeed(outputValues[j]);
        }
    }
}
```

Brain class (too big to post in full, so this is just the function that "thinks"):

```csharp
protected override void ThreadFunction()
{
    think();
}

public void think()
{
    // Debug.Log("in the think function");
    // Debug.Log(inputVal.Length);
    for (int i = 0; i < inputVal.Length; i++)
    {
        // Debug.Log("inputting value of " + inputVal[i]);
    }

    // Get the new inputs and calculate the first layer.
    for (int neu = 0; neu < neuronsPerLayer; neu++)
    {
        // Debug.Log("now calculating inputs for " + neu + " neuron");
        for (int i = 0; i < inputVal.Length; i++)
        {
            midLayer[0, neu].sumInput(inputVal[i], i);
            // yield return null;
        }
        midLayer[0, neu].calOutput();
        midLayer[0, neu].clearInput();
        // Debug.Log("neuron " + neu + " has an output of " + midLayer[0, neu].getOutput());
        // yield return null;
    }

    // Calculate all the middle layers (might be none).
    for (int lay = 1; lay < layerNum; lay++)
    {
        // Debug.Log("now on " + lay + " layer");
        for (int neu = 0; neu < neuronsPerLayer; neu++)
        {
            // Debug.Log("working on neuron " + neu);
            for (int oP = 0; oP < neuronsPerLayer; oP++)
            {
                midLayer[lay, neu].sumInput(midLayer[lay - 1, oP].getOutput(), oP);
                // yield return null;
            }
            midLayer[lay, neu].calOutput();
            midLayer[lay, neu].clearInput();
            // Debug.Log("neuron " + neu + " has an output of " + midLayer[lay, neu].getOutput());
            // yield return null;
        }
    }

    // Calculate the outputs.
    // Debug.Log("now calculating the outputs");
    for (int neu = 0; neu < outputNum; neu++)
    {
        for (int oP = 0; oP < neuronsPerLayer; oP++)
        {
            outLayer[neu].sumInput(midLayer[layerNum - 1, oP].getOutput(), oP);
            // yield return null;
        }
        outLayer[neu].calOutput();
        outLayer[neu].clearInput();
        Debug.Log("neuron " + neu + " has an output of " + outLayer[neu].getOutput());
        output[neu] = outLayer[neu].getOutput();
    }
    // Debug.Log("at the end of the thought");
}
```

Thanks in advance for your time!

Answer by Bunny83 · Jul 26, 2016 at 09:45 PM

Your neural network code is most likely not the bottleneck, especially when you don't have large networks. Someone else recently had problems with an implementation, and I created a NeuronalNetwork class. It does not use backpropagation or other learning methods, only a generational approach with random mutations. I can have 100+ objects, each with a net that has 3 inputs, one middle layer with 5 neurons and 2 output neurons.

Make sure you remove ALL Debug.Logs from code that is called frequently. Debug.Log is extremely slow, especially inside the editor. In a build it's way better, but it still has quite an impact. Not to mention that it will blow up your log files to several MB very quickly.

Only use Debug.Log when you are actually debugging something or if you just want to log some initial values. In Update / frequently called code you should only use it temporarily.
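
One way to keep diagnostic logging in the source without paying for it every frame is C#'s `[Conditional]` attribute. The compiler removes every call to a method marked with it unless the named symbol is defined, and that includes evaluating the string concatenation in the argument. The sketch below is illustrative only. The `BrainLog` helper and the `NEURAL_DEBUG` symbol are hypothetical names, and it writes to `Console` so that it runs outside Unity.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: calls to BrainLog.Write are removed at compile time
// unless the NEURAL_DEBUG symbol is defined for the calling code.
public static class BrainLog
{
    // Counts how many messages were actually emitted (handy to verify the stripping).
    public static int Emitted { get; private set; }

    [Conditional("NEURAL_DEBUG")]
    public static void Write(string message)
    {
        Emitted++;
        // In Unity this could be UnityEngine.Debug.Log(message), fully qualified
        // because System.Diagnostics also defines a Debug class.
        Console.WriteLine(message);
    }
}
```

A call such as `BrainLog.Write("neuron " + neu + " has an output of " + value);` can then stay in `think()`. Without the symbol defined, it costs nothing.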

Edit: I just opened my old test project and ran a short test:

As you can see, I have about 260 objects there, and it runs at 380+ fps even though there are many Debug.DrawLine calls and TrailRenderers. Also note that I run the neural network code in Update and not on a separate thread. One run through a network is so little work that using threads most likely won't improve anything. In fact, having a lot of tiny threads often does more harm than good. You also seem to use the "ThreadedJob" class, but it was meant for heavy one-time tasks. Creating a new thread each frame is a very bad idea ^^. It creates tons of garbage and has a lot of overhead. The overhead is probably larger than the actual code ^^.
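
The sketch below illustrates what "just run it in Update" can look like. It is a fully connected feed-forward network evaluated synchronously on the calling thread. All buffers are allocated once in the constructor, so repeated calls create no garbage. This is not Bunny83's NeuronalNetwork class. The `FeedForwardNet` name, the flat weight layout, the lack of bias terms, and the `tanh` activation are all assumptions made for illustration.

```csharp
using System;

// Hypothetical fully connected feed-forward network, e.g. layer sizes {3, 5, 5, 2}.
public sealed class FeedForwardNet
{
    private readonly int[] sizes;
    private readonly float[][] weights;     // weights[l][to * sizes[l] + from]
    private readonly float[][] activations; // one buffer per layer, reused on every call

    public FeedForwardNet(int[] layerSizes, Random rng)
    {
        sizes = (int[])layerSizes.Clone();
        weights = new float[sizes.Length - 1][];
        activations = new float[sizes.Length][];
        for (int l = 0; l < sizes.Length; l++)
            activations[l] = new float[sizes[l]];
        for (int l = 0; l < sizes.Length - 1; l++)
        {
            weights[l] = new float[sizes[l] * sizes[l + 1]];
            for (int i = 0; i < weights[l].Length; i++)
                weights[l][i] = (float)(rng.NextDouble() * 2.0 - 1.0); // random in [-1, 1)
        }
    }

    // Runs one forward pass on the calling thread (e.g. from Update) and returns the
    // output buffer. The array is reused, so copy it if you need to keep old values.
    public float[] Evaluate(float[] input)
    {
        Array.Copy(input, activations[0], sizes[0]);
        for (int l = 0; l < sizes.Length - 1; l++)
        {
            float[] src = activations[l], dst = activations[l + 1], w = weights[l];
            int nIn = sizes[l];
            for (int to = 0; to < dst.Length; to++)
            {
                float sum = 0f;
                for (int from = 0; from < nIn; from++)
                    sum += w[to * nIn + from] * src[from];
                dst[to] = (float)Math.Tanh(sum);
            }
        }
        return activations[sizes.Length - 1];
    }
}
```

In a MonoBehaviour, `Update` would call `net.Evaluate(inputValues)` and pass the results to the jets directly. No thread handoff or `IsDone` polling is needed.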

If you still have performance problems, use Unity's Profiler to see where the bottleneck is.

PS: I was actually wrong ^^, I used a 3-5-5-2 network. I've made an editor window to visualize a network:

PS: How many inputs / outputs / layers / neurons per layer do you have?
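
For a sense of scale, assume fully connected layers and ignore any bias terms. One forward pass through the 3-5-5-2 network mentioned above then needs one multiply-add per connection:

$$3 \cdot 5 + 5 \cdot 5 + 5 \cdot 2 = 15 + 25 + 10 = 50$$

It also needs one activation-function call for each of the $5 + 5 + 2 = 12$ non-input neurons. For the roughly 260 objects in the test above, that comes to about $260 \cdot 50 = 13{,}000$ multiply-adds per frame. This is consistent with the point that the network math itself is cheap compared with creating threads every frame.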

Wow, that's really impressive! Thanks for the reply. It's supposed to be dynamic; the code I have checks how many inputs/outputs (eyes, jets, etc.) the creature has when it's "born." I'll try to clean up the code and remove a bunch of the debugging stuff, as well as take a look at your neural net code. One (big?) difference between what I've got and your example here is that all the creatures are made of rigidbodies; they use physics to move through the world. Would that slow down my performance drastically? I'll try to smooth out the code, drop the threading, and try again. Will report back with results.

Well, I more or less took over the sample project that was linked in the other question. The objects still have rigidbodies, but they are kinematic. They are only used for detecting collisions with the food items (triggers). I don't think using Rigidbodies would have a huge impact. However, it depends. It's kind of bad if each RB often collides with other RBs, or if they are trapped between others and bump into each other all the time. 3D physics at least doesn't handle that very well. 2D physics (which uses Box2D instead of PhysX) can handle that case better.

Varying input count sounds a bit strange. How do you evolve your network then? Changing anything about the network structure would in most cases completely break what it has achieved so far. Also, the more inputs you have, the more room there is for complete failures. For example, in my example above the network has 3 inputs, but only 1 is actually used. The others are taken into account as well, but they don't change. The objects only do a single raycast, and the distance is used as input. A linear (purely feed-forward) neural network can't "remember" a state, as the input just flows through to the output.
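
One common way to soften the structure-change problem is to give the new connections a weight of exactly 0 whenever a mutation adds an input. The network then computes the same outputs as before until later mutations adjust those weights. This is an assumption offered for illustration; neither poster's code is shown doing it. The minimal sketch below works on a single layer and uses hypothetical `GrowInputs` and `Forward` helpers with an `[output, input]` weight matrix.

```csharp
using System;

public static class NetworkGrowth
{
    // Hypothetical helper: returns a copy of an [outputs x inputs] weight matrix
    // with `extraInputs` additional input columns, all initialized to zero.
    public static float[,] GrowInputs(float[,] weights, int extraInputs)
    {
        int outputs = weights.GetLength(0);
        int inputs = weights.GetLength(1);
        var grown = new float[outputs, inputs + extraInputs]; // new columns default to 0f
        for (int o = 0; o < outputs; o++)
            for (int i = 0; i < inputs; i++)
                grown[o, i] = weights[o, i];
        return grown;
    }

    // Weighted sum per output (activation omitted for brevity).
    public static float[] Forward(float[,] weights, float[] input)
    {
        int outputs = weights.GetLength(0);
        int inputs = weights.GetLength(1);
        var result = new float[outputs];
        for (int o = 0; o < outputs; o++)
            for (int i = 0; i < inputs; i++)
                result[o] += weights[o, i] * input[i];
        return result;
    }
}
```

With zero weights, a newly evolved "eye" starts out silent. Selection can then decide whether it becomes useful, rather than the extra input scrambling an already working brain.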

To give a neural network the ability to "remember" something, it needs some kind of "backlinking" (recurrent connections). See this article.
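
The sketch below illustrates the backlinking idea with a single hypothetical `RecurrentNeuron`. Its previous output is fed back as an extra weighted input on the next step. This is a generic self-recurrent connection, not the specific architecture from the linked article, and the weights and the `tanh` activation are illustrative assumptions.

```csharp
using System;

// Hypothetical neuron with one self-recurrent link: its previous output is
// treated as an extra input on the next step, which gives it a simple memory.
public sealed class RecurrentNeuron
{
    private readonly float inputWeight;
    private readonly float recurrentWeight;
    private float previousOutput; // state carried between calls

    public RecurrentNeuron(float inputWeight, float recurrentWeight)
    {
        this.inputWeight = inputWeight;
        this.recurrentWeight = recurrentWeight;
    }

    public float Step(float input)
    {
        float sum = inputWeight * input + recurrentWeight * previousOutput;
        previousOutput = (float)Math.Tanh(sum);
        return previousOutput;
    }
}
```

Suppose the input is 1 on one step (a target is seen) and 0 on the next (the raycast missed). This neuron still produces a positive, decaying output on the second step. A purely feed-forward neuron without bias would output exactly 0 there.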

Implementing "eyes" with raycasts is easy but gives a poor result. An eye has a range that it covers; a raycast is only a single line. As soon as the object rotates slightly, the raycast might miss the object it saw before. Raycasts that go sideways / at an angle are, for example, useless for hunting a target without some kind of memory / internal state. They can be helpful for collision avoidance, though.

I should note that my NeuronalNetwork approach currently only links the layers in order. The links go strictly from input --> layer1 --> layerX --> output. However, the architecture does support loops / rings, but those links have to be created manually.

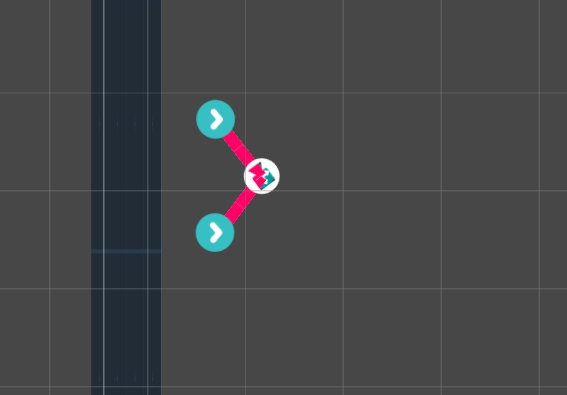

They do collide with other rigidbodies, which I might need to change now. The original idea of the project was basically to try to evolve virtual 2d machines that were constructed out of simple building blocks like rectangles and force appliers. For example, this is a creature that is constructed out of two "rods" (rectangles with rigidbodies and rectangle colliders), two "jets" (nodes that apply a certain amount of force), and one "eye" (a raycast that shoots every half second ten units in front of the organism):

The code I have now is more or less successfully integrated into the neuronal network example you provided, except for a few spots I still need to add. It scans the number of inputs (eyes, and hopefully other sensors in the future, like color or pheromones) and the number of outputs (jets, and later others such as the ability to grab onto things). It then creates the neural network with that number of inputs and outputs. So this creature automatically creates a brain with one input and two outputs. That's why it's not fixed: the creature could evolve a new eye or something and add an input. But if doing it that way is an evolutionary deterrent (it breaks the brain), I might need to go back to the drawing board. The genetics are stored in a string of codons made of the digits 0-3 (e.g. 0133011021302130013), which contains the building blocks for both the body and the brain. The string can thus be altered in the same ways that actual genetic code is altered: with deletions, swaps, and copies. The dream is a flexible set of building blocks that enables evolution of both body and mind. I'd also like stable physics that lets the creatures move around and exhibit emergent behavior that marries the two together. But maybe I'm reaching too far.
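
The three kinds of mutation mentioned above (deletions, swaps, and copies) can be sketched on a digit string. `CodonMutator` is a hypothetical helper that operates on individual digits of the alphabet `0`-`3`. How the asker's project groups digits into codons and decodes them into body parts and weights isn't shown in the thread, so that part is left out.

```csharp
using System;
using System.Text;

// Hypothetical mutation operators on a genome string over the alphabet '0'..'3'.
public static class CodonMutator
{
    // Removes `length` digits starting at `start`.
    public static string Delete(string genome, int start, int length) =>
        genome.Remove(start, length);

    // Swaps the digits at positions a and b.
    public static string Swap(string genome, int a, int b)
    {
        var sb = new StringBuilder(genome);
        char tmp = sb[a];
        sb[a] = sb[b];
        sb[b] = tmp;
        return sb.ToString();
    }

    // Duplicates a segment and inserts the copy directly after the original.
    public static string Copy(string genome, int start, int length) =>
        genome.Insert(start + length, genome.Substring(start, length));

    // Applies one randomly chosen operator at a random position.
    public static string MutateOnce(string genome, Random rng)
    {
        if (genome.Length < 2) return genome;
        int a = rng.Next(genome.Length);
        int b = rng.Next(genome.Length);
        int len = 1 + rng.Next(Math.Min(3, genome.Length - a)); // 1..3 digits, stays in bounds
        switch (rng.Next(3))
        {
            case 0: return Delete(genome, a, len);
            case 1: return Swap(genome, a, b);
            default: return Copy(genome, a, len);
        }
    }
}
```

Every operator only rearranges, removes, or duplicates existing digits, so the result always stays within the `0`-`3` alphabet. Note that deletions and copies shift everything after them, which is exactly the kind of change that can alter the decoded input/output count.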

PS: Thank you so much for your help. I've been poring over the neuronal network code and have already learned a lot about how to program efficiently. I've basically googled every bit of syntax I don't understand, and it's been really helpful.

Actually, would it be possible for you to explain the Mutate function in the Neuron class? I'm scratching my head at it, because it seems like it only does anything if the rate is set to -1, in which case it randomizes all values. If the aMutationRate value is set to anything else, it doesn't seem to do anything. Am I looking at it wrong?

As I said in the other answer where I posted my class, I just took over that sample project. The mutation rate controls how likely a setting is to be changed. Apart from the special value -1, the mutation rate has to be 2 or greater to have any effect. The lower the value, the more likely a mutation is, so 2 is the lowest setting: each setting then has a 50% chance of being mutated.

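| Mutation rate | Chance that a setting mutates |
|---|---|
| -1 | 100% |
| 2 | 50% |
| 3 | 33.33% |
| 4 | 25% |
| 10 | 10% |
| 20 | 5% |
| 100 | 1% |
| 1000 | 0.1% |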
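
The table follows a simple pattern: a rate of n ≥ 2 gives a 1/n chance per setting, and -1 means "always". The actual Mutate code from the linked NeuronalNetwork class isn't reproduced in this thread. The `MutationChance.ShouldMutate` name and the use of `System.Random` in this hypothetical sketch are therefore assumptions.

```csharp
using System;

public static class MutationChance
{
    // Hypothetical check: -1 always mutates; a rate n >= 2 mutates with probability 1/n.
    // Other values below 2 never mutate, matching "has to be 2 or greater to have any effect".
    public static bool ShouldMutate(int mutationRate, Random rng)
    {
        if (mutationRate == -1) return true;
        if (mutationRate < 2) return false;
        return rng.Next(mutationRate) == 0; // Next(n) returns 0..n-1, so 0 has chance 1/n
    }
}
```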

The idea is to increase the mutation rate with each generation, so the further down a certain "genome" gets, the less likely it is to mutate. As I stated in my other answer, picking a completely new value is actually quite a bad approach. If a certain setting mutates, it can break everything. In that case the generation simply dies and won't spread its genes, but that could happen even to quite advanced generations. It would be better to also adjust the "mutation amount". Of course, discrete settings like the activation function can only take certain values. However, the weights and the sigmoid base could simply be "adjusted" by adding / subtracting a small amount. That would better preserve the previous state.

That amount should be high at the beginning and get lower in later generations (1f / mutationRate, for example).
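
The sketch below shows that suggestion: instead of replacing a weight with a fresh random value, nudge it by a random offset. The maximum size of the offset shrinks as the mutation rate grows, using the `1f / mutationRate` example given above. `NudgeWeight` is a hypothetical name, and the uniform perturbation is an assumption. The rate is clamped to at least 1, so -1 ("always mutate") uses the largest step of 1.

```csharp
using System;

public static class WeightMutation
{
    // Hypothetical helper: adds a uniform random offset in [-maxStep, maxStep),
    // where maxStep = 1 / mutationRate, so later (higher-rate) generations take smaller steps.
    public static float NudgeWeight(float weight, int mutationRate, Random rng)
    {
        float maxStep = 1f / Math.Max(1, mutationRate);
        float offset = (float)(rng.NextDouble() * 2.0 - 1.0) * maxStep;
        return weight + offset;
    }
}
```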

Also note that you should always have an additional input that is fixed at 1.0. That provides a permanent generic input. Without such a bias neuron, the agent is more likely to do nothing. When all inputs are 0, you need at least one activation function that is Sigmoid or StepNP, as they are the only ones that generate a non-zero output for a zero input. With a bias neuron you get at least some input (depending on its weights, of course).
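
The minimal sketch below shows a bias input. It appends a constant 1.0 to the sensor values before feeding the network, so the weight on that extra input acts as a per-neuron offset. With all sensors at 0, a tanh neuron without the bias outputs exactly 0. With the bias, it outputs tanh of the bias weight. `WithBias` and `TanhNeuron` are hypothetical helpers, and tanh stands in for any activation that maps 0 to 0.

```csharp
using System;

public static class BiasInput
{
    // Hypothetical helper: copies the sensor values and appends a constant 1.0 bias input.
    public static float[] WithBias(float[] sensors)
    {
        var inputs = new float[sensors.Length + 1];
        Array.Copy(sensors, inputs, sensors.Length);
        inputs[sensors.Length] = 1f; // permanent bias input
        return inputs;
    }

    // Single tanh neuron: weighted sum of the inputs, then tanh.
    public static float TanhNeuron(float[] inputs, float[] weights)
    {
        float sum = 0f;
        for (int i = 0; i < inputs.Length; i++)
            sum += inputs[i] * weights[i];
        return (float)Math.Tanh(sum);
    }
}
```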

Of course, it also depends on what kind of input your "sensors" provide.

Sorry for barging in here. I actually always used a MutationRate of 5, derp.

In my project, the sight or "eye" is a camera component, and I get its view bounds (frustum). I use that to check the target's distance and "centering". Distance is just the AI's position minus the target's. Centering is a float value from 0 to 1, 0 being the left side and 1 being the right side of the camera view. This seems to work pretty well, but I don't know how it would work with multiple targets.
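
A similar "eye" reading can be computed in 2D without a Camera component. The idea is to take the angle between the creature's facing direction and the direction to the target, reject targets outside a field of view, and map the rest to 0 (left edge) through 1 (right edge) alongside a distance value. This is a sketch under assumptions. The `EyeSensor.TryRead` name, the plain-float coordinates, and the counterclockwise-positive angle convention are all illustrative choices, not taken from either poster's project.

```csharp
using System;

public static class EyeSensor
{
    // Hypothetical 2D "eye": returns false if the target is outside the field of view.
    // Otherwise centering is 0 at the left edge, 0.5 straight ahead, 1 at the right edge,
    // and distance is the length of (target - eye).
    public static bool TryRead(
        float eyeX, float eyeY, float facingRadians, float fovRadians,
        float targetX, float targetY,
        out float centering, out float distance)
    {
        float dx = targetX - eyeX, dy = targetY - eyeY;
        distance = (float)Math.Sqrt(dx * dx + dy * dy);

        // Signed angle from the facing direction to the target, wrapped into (-pi, pi].
        double angle = Math.Atan2(dy, dx) - facingRadians;
        while (angle > Math.PI) angle -= 2 * Math.PI;
        while (angle <= -Math.PI) angle += 2 * Math.PI;

        if (Math.Abs(angle) > fovRadians / 2.0) { centering = 0f; return false; }

        // Counterclockwise (positive) angles are on the creature's left, hence the minus sign.
        centering = (float)(0.5 - angle / fovRadians);
        return true;
    }
}
```

With multiple targets, one option is to call this for each candidate and feed the nearest visible one to the network. Another is to split the view into a few sectors and give each sector its own input.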
