
Can't find a way to downscale a 560x560 Texture2D / Image to a 28x28 resolution without losing lots of pixels

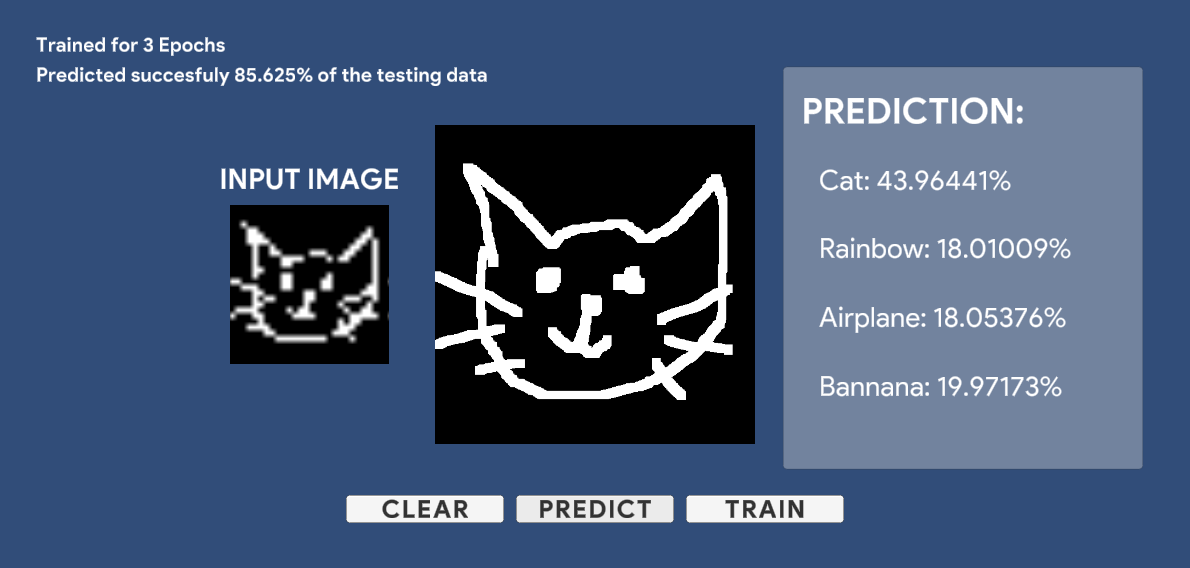

Over the past few days I've developed a doodle recognition application with a neural network library I wrote a while back. Everything is running fine now. I only need to be able to draw on a texture big enough to draw on comfortably (580x580 works just fine for me), and then get the pixel data to feed forward through my neural net library. The problem comes when I try to downscale the image: I lose too much data, and the resulting image is just a bunch of dots following the stroke I drew on the bigger image. I don't know how to fix it.

I just want to downscale the texture in a pixel-perfect way. I don't mind blockiness, since that's the nature of the training data I used for the ML model.

Here's the script I've been using to downscale my image with no luck: https://pastebin.com/qkkhWs2J

I used @petersvp's script.

And here's the code triggered by a button once I finish my Doodle on the main texture:

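```csharp
// Needs: using System.IO; using UnityEngine;
public void GetDrawingData()
{
    // TextureScale.scaled (from @petersvp's script) returns a new 28x28 texture,
    // so there is no need to allocate one beforehand with new Texture2D(28, 28).
    ScalableTex = TextureScale.scaled(DrawnTex, 28, 28, FilterMode.Point);
    ScalableTex.Apply();

    // Feed the downscaled pixels to the neural net
    // (ColorToFloat() is a custom extension method, not shown here)
    GetResult(ScalableTex.GetPixels().ColorToFloat());

    // Preview the result on a sprite...
    targetSprite.GetComponent<SpriteRenderer>().sprite = Sprite.Create(
        ScalableTex,
        new Rect(0, 0, ScalableTex.width, ScalableTex.height),
        new Vector2(0.5f, 0.5f));

    // ...and dump it to a PNG next to the Assets folder for inspection
    byte[] bytes = ScalableTex.EncodeToPNG();
    File.WriteAllBytes(Application.dataPath + "/../a.png", bytes);
}
```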

You asked for point sampling and you've got what you asked for.

Use trilinear filtering instead: FilterMode.Trilinear.

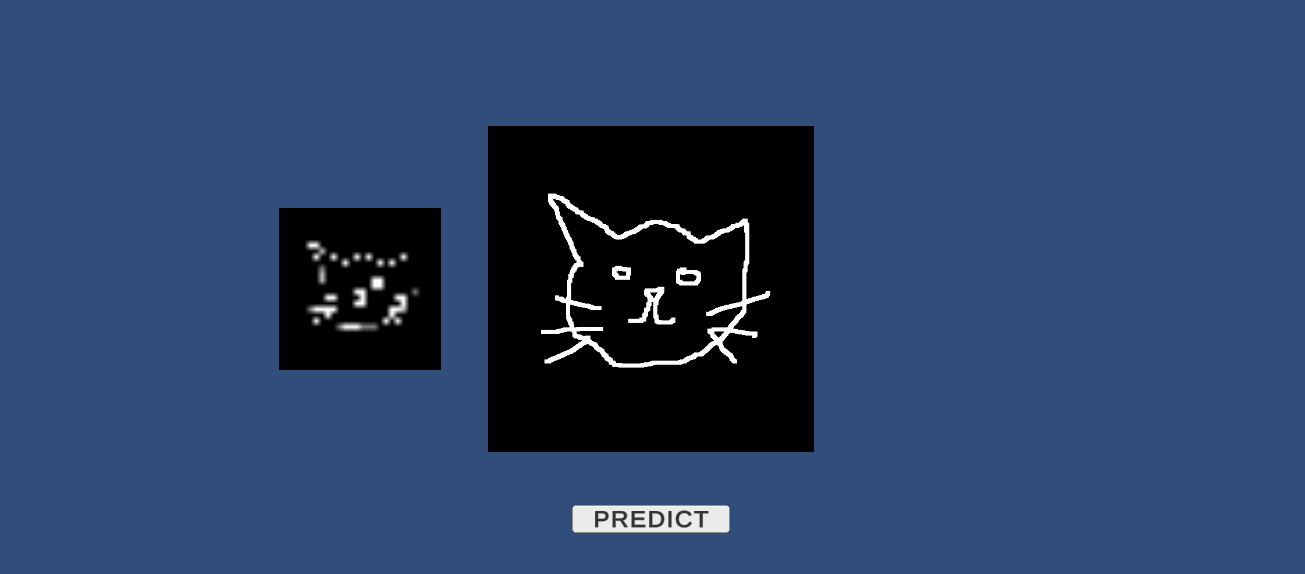

I changed it to FilterMode.Point just to test since leaving it on default didn't really help me either, sorry if I called your code wrongly I am no texture/image expert and thought the process of downscaling a 2D texture would be pretty straightforward. I've now changed it to FilterMode.Trilinear and got this:

Thanks for making such a helpful script, by the way. Unity's Texture2D.scale just gave me a grey pixel array; from what I read, it only resizes the colour array, not the image itself.

Trilinear filtering requires the texture to have mipmaps. I can't remember exactly how they're built, though; maybe Texture2D.Apply? Also, 28x28 isn't the best size; try 32x32? I can't test it right now, but I may be able to later today.

Trilinear filtering is unnecessary in this case as there are no mipmaps; it would be equivalent to bilinear filtering, and it still wouldn't solve this issue (filtering after sampling won't fill in the information lost when downscaling via nearest neighbour directly from full-res to low-res).
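A minimal CPU-side sketch of that last point, in plain C# with no Unity types. It assumes images are grayscale float[height, width] arrays (1 = ink, 0 = background), and the class and method names are hypothetical. A 1-pixel-wide stroke on a 560x560 canvas disappears completely when it falls between the sampled columns, and blurring the 28x28 result afterwards cannot bring it back, because the blur only ever sees zeros.

```csharp
using System;

// Hypothetical helpers for illustration; images are grayscale float[height, width].
public static class PointSamplingDemo
{
    // Nearest-neighbour ("point") downscale: each low-res pixel copies exactly one
    // full-res pixel, the one under its centre. All other full-res pixels are ignored.
    public static float[,] NearestDownscale(float[,] src, int dstW, int dstH)
    {
        int srcH = src.GetLength(0), srcW = src.GetLength(1);
        var dst = new float[dstH, dstW];
        for (int y = 0; y < dstH; y++)
            for (int x = 0; x < dstW; x++)
            {
                // Centre of the low-res pixel, mapped into full-res pixel coordinates
                int sx = (int)((x + 0.5f) * srcW / dstW);
                int sy = (int)((y + 0.5f) * srcH / dstH);
                dst[y, x] = src[sy, sx];
            }
        return dst;
    }

    // A 3x3 box blur applied *after* downscaling (edge pixels are clamped).
    public static float[,] Blur3x3(float[,] img)
    {
        int h = img.GetLength(0), w = img.GetLength(1);
        var dst = new float[h, w];
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                float sum = 0;
                for (int dy = -1; dy <= 1; dy++)
                    for (int dx = -1; dx <= 1; dx++)
                        sum += img[Math.Clamp(y + dy, 0, h - 1), Math.Clamp(x + dx, 0, w - 1)];
                dst[y, x] = sum / 9f;
            }
        return dst;
    }

    // Total amount of "ink" in an image
    public static float Total(float[,] img)
    {
        float sum = 0;
        foreach (float v in img) sum += v;
        return sum;
    }
}
```

With 560 to 28 the sampled columns are 10, 30, 50 and so on, so whether a thin stroke survives is down to luck: a stroke in column 10 comes through at full strength, while one in column 5 vanishes. That would explain the "bunch of dots" described in the question.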

He must use mipmaps for trilinear downsampling. There's a Texture2D constructor with a bool parameter for mipmaps.

I've really tried every single thing I could find on this forum and elsewhere on the internet about downscaling a texture in Unity, but Unity just doesn't offer the functionality. My case is very specific, since I need to downscale so much, so it makes sense that data is lost; still, I was hoping to find an easy way to do it. The only other option I can think of is calling a resizing API to do the work for me.

I got my data from here. It shouldn't be this hard to downscale an image, should it?

Again, try the Texture2D constructor that takes the size and a boolean for mipmaps.

You're removing 99.8% of the pixels. You're going to suffer a lot of data loss.
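For the 560x560 texture in the title, the numbers work out as follows; each output pixel has to stand in for a 20x20 block of 400 input pixels:

$$1 - \frac{28 \times 28}{560 \times 560} = 1 - \frac{784}{313600} = 1 - \frac{1}{400} = 99.75\% \approx 99.8\%$$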

That's the whole point: I want to lose data so my neural net can process it faster, and it's the format the data came in anyway. Still, I was expecting something more like this: https://imgur.com/a/cgLMqqX

Answer by Namey5 · Nov 21, 2021 at 11:47 AM

The reason this is occurring is because you are only sampling the full resolution texture once per low-res pixel. As a result, most of the pixels get skipped and you end up with an image that might be passable for some textures, but in your case not at all. Instead what you want to do is keep all of the data for every high resolution pixel and combine them all into the nearest low-res pixel in some way.
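One way to do that combining step on the CPU is an area-averaging (box) downscale. The sketch below is plain C# with no Unity types; it assumes grayscale float[height, width] arrays, and the names are hypothetical. Each output pixel averages every input pixel it overlaps, weighted by the overlapping area, so an input pixel that straddles an output-pixel border is shared between both, in line with the "be conservative" advice in the next paragraph. It is a simple loop over all source pixels, not the GPU approach this answer goes on to use.

```csharp
using System;

// Hypothetical helper: area-averaging ("box filter") downscale of a grayscale
// float[height, width] image, with fractional coverage for non-integer ratios.
public static class AreaDownscale
{
    public static float[,] Downscale(float[,] src, int dstW, int dstH)
    {
        int srcH = src.GetLength(0), srcW = src.GetLength(1);
        double scaleX = (double)srcW / dstW; // input pixels per output pixel, horizontally
        double scaleY = (double)srcH / dstH; // input pixels per output pixel, vertically
        var dst = new float[dstH, dstW];

        for (int y = 0; y < dstH; y++)
        {
            // Vertical extent [y0, y1) of this output pixel, in input-pixel units
            double y0 = y * scaleY, y1 = (y + 1) * scaleY;
            for (int x = 0; x < dstW; x++)
            {
                double x0 = x * scaleX, x1 = (x + 1) * scaleX;
                double sum = 0, weightSum = 0;
                for (int sy = (int)Math.Floor(y0); sy < Math.Min(srcH, (int)Math.Ceiling(y1)); sy++)
                {
                    // Fraction of input row sy that lies inside [y0, y1)
                    double wy = Math.Min(y1, sy + 1) - Math.Max(y0, sy);
                    for (int sx = (int)Math.Floor(x0); sx < Math.Min(srcW, (int)Math.Ceiling(x1)); sx++)
                    {
                        // Fraction of input column sx that lies inside [x0, x1)
                        double wx = Math.Min(x1, sx + 1) - Math.Max(x0, sx);
                        sum += src[sy, sx] * wx * wy;
                        weightSum += wx * wy;
                    }
                }
                dst[y, x] = (float)(sum / weightSum);
            }
        }
        return dst;
    }
}
```

For 560 to 28 the ratio is exactly 20, so every weight is 1 and this reduces to a plain 20x20 average; a 1-pixel stroke then survives as a faint 5% grey line instead of vanishing. In Unity, the input array could be filled from Texture2D.GetPixels() (for example using Color.grayscale), keeping in mind that GetPixels lays rows out from the bottom of the texture upwards.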

To do this 'accurately' you would need to find all of the full-res pixels that are covered by the area of the low-res pixel and average them (be conservative; full-res pixels should be shared if there isn't a 1-1 match). This requires a lot of manual work however - an optimised way to do this is to progressively downsample, i.e. rather than going straight from full-res -> low-res you can instead go from full-res -> half-res -> quarter-res -> ... -> desired-res:

```csharp
// Requires: using UnityEngine;
public Texture2D FilteredDownscale (Texture2D a_Source, int a_NewWidth, int a_NewHeight)
{
    // Keep the last active RT
    RenderTexture _LastActiveRT = RenderTexture.active;

    // Graphics.Blit samples the source with the source's own filter mode, so make sure
    // the first pass is bilinear as well (restored at the end)
    FilterMode _LastFilterMode = a_Source.filterMode;
    a_Source.filterMode = FilterMode.Bilinear;

    // Start by halving the source dimensions
    int _Width = a_Source.width / 2;
    int _Height = a_Source.height / 2;

    // Cap to the target dimensions.
    // This could be done with Mathf.Max() but that wouldn't take into account aspect ratio.
    if (_Width < a_NewWidth || _Height < a_NewHeight)
    {
        _Width = a_NewWidth;
        _Height = a_NewHeight;
    }

    // Create a temporary downscaled RT and copy the source into it
    RenderTexture _Tmp1 = RenderTexture.GetTemporary (_Width, _Height, 0, RenderTextureFormat.ARGB32);
    _Tmp1.filterMode = FilterMode.Bilinear;
    Graphics.Blit (a_Source, _Tmp1);

    // Loop until our target dimensions have been reached
    while (_Width > a_NewWidth && _Height > a_NewHeight)
    {
        // Keep halving our current dimensions
        _Width /= 2;
        _Height /= 2;

        // And match our target dimensions once small enough
        if (_Width < a_NewWidth || _Height < a_NewHeight)
        {
            _Width = a_NewWidth;
            _Height = a_NewHeight;
        }

        // Downscale again into a smaller RT
        RenderTexture _Tmp2 = RenderTexture.GetTemporary (_Width, _Height, 0, RenderTextureFormat.ARGB32);
        _Tmp2.filterMode = FilterMode.Bilinear;
        Graphics.Blit (_Tmp1, _Tmp2);

        // Swap our temporary RTs and release the oldest one
        (_Tmp1, _Tmp2) = (_Tmp2, _Tmp1);
        RenderTexture.ReleaseTemporary (_Tmp2);
    }

    // At this point _Tmp1 should hold our fully downscaled image,
    // so set it as the active RT
    RenderTexture.active = _Tmp1;

    // Create a new texture of the desired dimensions and copy our data into it
    Texture2D _Tex = new Texture2D (a_NewWidth, a_NewHeight);
    _Tex.ReadPixels (new Rect (0, 0, a_NewWidth, a_NewHeight), 0, 0);
    _Tex.Apply ();

    // Reset the active RT and the source's filter mode, then release our last temporary copy
    RenderTexture.active = _LastActiveRT;
    a_Source.filterMode = _LastFilterMode;
    RenderTexture.ReleaseTemporary (_Tmp1);

    return _Tex;
}
```

The reason this works is that sampling at exactly half resolution with bilinear filtering causes every low-res texture sample to land directly in between the 4 neighbouring full-res pixels, which are then averaged directly by the bilinear filtering.
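This claim can be checked with a small CPU model of a single Graphics.Blit pass. It is a sketch under assumptions: plain C# with grayscale float[height, width] arrays, hypothetical names, clamp-to-edge addressing, and the common GPU convention that texel i of a W-wide texture has its centre at u = (i + 0.5) / W; real GPUs may also compute the filter weights at lower precision. With one bilinear tap per destination pixel, halving lands every tap exactly between four texels (weight 0.25 each), whereas going straight to quarter size lands each tap between the middle 2x2 texels of a 4x4 block and ignores the other 12.

```csharp
using System;

// Hypothetical CPU model of one blit: every destination pixel takes a single
// bilinear sample of the source at the destination pixel's centre.
public static class BilinearHalving
{
    // Bilinear sample at normalised coordinates (u, v), clamping to the edges.
    public static float SampleBilinear(float[,] src, double u, double v)
    {
        int h = src.GetLength(0), w = src.GetLength(1);
        double tx = u * w - 0.5, ty = v * h - 0.5;   // continuous texel coordinates
        int x0 = (int)Math.Floor(tx), y0 = (int)Math.Floor(ty);
        double fx = tx - x0, fy = ty - y0;           // blend weights towards x0 + 1 and y0 + 1
        float At(int x, int y) => src[Math.Clamp(y, 0, h - 1), Math.Clamp(x, 0, w - 1)];
        double top = At(x0, y0) * (1 - fx) + At(x0 + 1, y0) * fx;
        double bottom = At(x0, y0 + 1) * (1 - fx) + At(x0 + 1, y0 + 1) * fx;
        return (float)(top * (1 - fy) + bottom * fy);
    }

    // Resample to dstW x dstH with one bilinear tap per destination pixel.
    public static float[,] Resample(float[,] src, int dstW, int dstH)
    {
        var dst = new float[dstH, dstW];
        for (int y = 0; y < dstH; y++)
            for (int x = 0; x < dstW; x++)
                dst[y, x] = SampleBilinear(src, (x + 0.5) / dstW, (y + 0.5) / dstH);
        return dst;
    }
}
```

For 560 to 28, the answer's loop goes 280, 140, 70, 35 and then 28. That last step is not an exact halving, so it is an ordinary bilinear resample, but by then each 35x35 pixel already summarises a 16x16 block of the original.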

This is actually brilliant, thank you very much for your answer. I ended up fixing it by increasing the pen width in the texture painter asset I was using, which also gave my neural net a much cleaner input, very similar to the training data. Sorry I didn't check your answer earlier; I had homework to do and classes to take, and left this project aside for a while. Although increasing the pen width was enough for me, I'll mark your answer as correct, since you put so much time and effort into helping me, just as many other kind users here have done. I've looked through the script and it makes perfect sense to me; it might actually solve the problem for someone who needs to downscale a texture as much as I did. Here's a screenshot of my little project working now, by the way :D

You basically made a multi-pass bilinear downsampler that emulates trilinear filtering in a way. Using your code to write the texture's mipmaps via RawTextureData, the mipmaps I fed into the original texture looked much better under native trilinear filtering than the crappy mipmaps Unity generates with Apply(true). Thanks!

From memory, Unity's autogenerated mipmaps are handled internally by the GPU, so there might be some differences depending on your hardware, but at a base level the mipmaps are probably generated the same way (i.e. by feeding the previous mip into the next, like this). Using trilinear filtering and mipmaps should produce pretty much the same image, I would think.
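A plain C# sketch of "feeding the previous mip into the next" on the CPU, assuming grayscale float[height, width] arrays and a simple 2x2 box filter per level. The names are hypothetical and this is not Unity's actual generator, which may differ by GPU and platform. For odd sizes this simple version just drops the last row or column.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical mip chain builder: each level halves the size (rounding down, minimum 1)
// and every texel is the 2x2 box average of the level above.
public static class MipChain
{
    public static List<float[,]> Build(float[,] level0)
    {
        var chain = new List<float[,]> { level0 };
        float[,] prev = level0;
        while (prev.GetLength(0) > 1 || prev.GetLength(1) > 1)
        {
            int ph = prev.GetLength(0), pw = prev.GetLength(1);
            int h = Math.Max(1, ph / 2), w = Math.Max(1, pw / 2);
            var next = new float[h, w];
            for (int y = 0; y < h; y++)
                for (int x = 0; x < w; x++)
                {
                    // 2x2 footprint in the previous level, clamped for 1-texel-wide levels
                    int x0 = Math.Min(2 * x, pw - 1), x1 = Math.Min(2 * x + 1, pw - 1);
                    int y0 = Math.Min(2 * y, ph - 1), y1 = Math.Min(2 * y + 1, ph - 1);
                    next[y, x] = (prev[y0, x0] + prev[y0, x1] + prev[y1, x0] + prev[y1, x1]) / 4f;
                }
            chain.Add(next);
            prev = next;
        }
        return chain;
    }
}
```

For a 560x560 canvas the chain is 560, 280, 140, 70, 35, 17, 8, 4, 2, 1. Shrinking by a factor of 20 corresponds to a mip level of about log2(20) ≈ 4.32, so trilinear filtering would roughly blend levels 4 (35x35) and 5 (17x17), while the multi-pass approach finishes with a bilinear step from the 35x35 level.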
