Camera depth causing RenderTexture issue

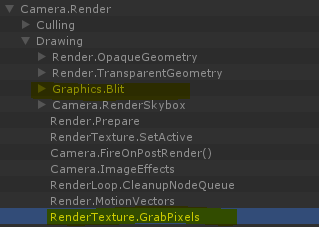

I've got a really strange issue I can't explain. I have several cameras in my scene rendering different things: one for the main 3D scene, one for the UI, one for an overlay, and so on. At one point in my app I change the depth of one of these cameras to -2, which puts that layer behind all my other layers. As soon as that happens, my render stack changes.

The highlighted items appear in the profiler, but they weren't there before. When the depth is changed back, they disappear again. It doesn't seem to matter which camera I set to a depth of -2; it causes the same issue. This wouldn't be a problem except that on iPad 4 the GrabPixels call takes up 94% of the CPU time. Any ideas what could be causing that? I can't find anything in my code that would call these functions. I'm using Unity 5.6.2f1.
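
For context on why -2 puts a layer "behind" the others: Unity renders cameras in ascending order of `Camera.depth`, so a lower-depth camera is drawn first and later cameras draw over it. The sketch below is a plain Python model of that ordering only, not Unity code, and it does not reproduce the GrabPixels behaviour. The `Cam` class, the camera names, and the starting depths 0, 1 and 2 are hypothetical; only the -2 value comes from the question.

```python
from dataclasses import dataclass


@dataclass
class Cam:
    name: str     # hypothetical camera name
    depth: float  # stands in for Unity's Camera.depth value


def render_order(cams):
    # Lower depth renders first, so its output ends up underneath later cameras.
    # sorted() is stable, so equal depths keep list order
    # (Unity's own tie-breaking is not modelled here).
    return [c.name for c in sorted(cams, key=lambda c: c.depth)]


cams = [Cam("Main3D", 0), Cam("UI", 1), Cam("Overlay", 2)]
print(render_order(cams))  # ['Main3D', 'UI', 'Overlay']

cams[2].depth = -2         # the change described in the question
print(render_order(cams))  # ['Overlay', 'Main3D', 'UI']
```

Moving any camera to -2 simply makes it the first in the stack, which matches the observation that the problem appears regardless of which camera is moved.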
