The question is answered, right answer was accepted

Incorrect depth buffer in unlit shader when running on VR device

I have a problem with my depth buffer, which is correct when running in the Editor, but wrong when running on my Oculus Quest 2.

I want to visualize molecules as sets of spheres. To reduce the number of vertices, I render quads instead of sphere meshes. In an unlit shader (see below), the fragment shader computes the per-fragment lighting and the correct depth value via a ray-sphere intersection.
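For reference, the intersection that `spherePos` in the listing below computes can be written out as follows. The symbols are notation introduced here, not names from the shader: $\mathbf{o}$ is the camera position, $\hat{\mathbf{d}}$ the normalized ray direction, $\mathbf{c}$ the sphere center, $r$ the radius, and $\mathbf{u} = \mathbf{o} - \mathbf{c}$.

$$
\mathbf{p}(t) = \mathbf{o} + t\,\hat{\mathbf{d}}, \qquad \lVert \mathbf{p}(t) - \mathbf{c} \rVert^2 = r^2
\;\;\Longrightarrow\;\;
t^2 + 2t\,(\hat{\mathbf{d}} \cdot \mathbf{u}) + \lVert \mathbf{u} \rVert^2 - r^2 = 0
$$

$$
\Delta = (\hat{\mathbf{d}} \cdot \mathbf{u})^2 - \left(\lVert \mathbf{u} \rVert^2 - r^2\right), \qquad
t_{\pm} = -(\hat{\mathbf{d}} \cdot \mathbf{u}) \pm \sqrt{\Delta}
$$

If $\Delta < 0$ the ray misses the sphere and the fragment is discarded. Otherwise the visible surface point is the nearer hit, $\mathbf{p}(t_{-})$, which is what the shader lights and projects to obtain its depth.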

In the Editor everything works fine; there the depth buffer seems to range from 1 to 0. On my Oculus Quest 2 the spheres look right, but when I place them behind other Unity objects they are still visible. I assume this is caused by the depth values I write. So far I have found out that the depth buffer in VR ranges from 0 to 1.

Is there anything wrong with my depth calculation, or does anyone know how depth is calculated in VR for other objects that use the standard shader?
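One thing that might be worth checking, offered as an assumption rather than a confirmed diagnosis: the Quest 2 is an Android device, so the build probably runs on an OpenGL-like API instead of Metal. On such APIs Unity's clip-space depth after the perspective divide spans -1 (near) to +1 (far), while the depth output expects a value in [0, 1]. The sketch below shows a possible remap. `ClipToDeviceDepth` is a hypothetical helper name, not a Unity function; the `SHADER_API_*` defines are Unity's built-in platform macros.

```hlsl
// Sketch only: meant to sit inside the CGPROGRAM block,
// after #include "UnityCG.cginc".
// ClipToDeviceDepth is a hypothetical helper, not part of Unity.
float ClipToDeviceDepth(float4 clipPos)
{
    // Perspective divide: clip space -> normalized device coordinates.
    float ndcZ = clipPos.z / clipPos.w;

#if defined(SHADER_API_GLCORE) || defined(SHADER_API_GLES) || defined(SHADER_API_GLES3)
    // OpenGL-like APIs: NDC z runs from -1 (near) to +1 (far),
    // so remap it into the [0, 1] range the depth output expects.
    return ndcZ * 0.5 + 0.5;
#else
    // Direct3D-like APIs such as Metal: NDC z is already in [0, 1]
    // (reversed, 1 at near and 0 at far) and can be written directly.
    return ndcZ;
#endif
}
```

In the fragment shader this would replace the plain division, for example `o.depth = ClipToDeviceDepth(mul(UNITY_MATRIX_VP, float4(sphereP, 1.0)));`. If the assumption holds, it would also fit the observation that the depth range is reversed (1 to 0) in the Editor on Metal but runs from 0 to 1 on the device.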

My setup: macOS, Unity 2020, Metal as the graphics API, and an Oculus Quest 2. I also use GPU instancing.

Thanks!

The fragment shader is the `frag` function at the end of the listing.

    Shader "Custom/Ilusion"
    {
        Properties
        {
            _Color("Color", Color) = (0.5, 0.5, 0.5, 1)
            _Radius("Radius", Float) = 0.25
        }
        SubShader
        {
            Tags { "RenderType"="Opaque" }
            LOD 100

            Pass
            {
                CGPROGRAM
                #pragma vertex vert
                #pragma fragment frag
                #pragma multi_compile_instancing

                #include "UnityCG.cginc"

                struct appdata
                {
                    float4 vertex : POSITION;
                    UNITY_VERTEX_INPUT_INSTANCE_ID
                };

                struct v2f
                {
                    float4 vertex : SV_POSITION;
                    float4 fragPos : TEXCOORD0;
                    UNITY_VERTEX_INPUT_INSTANCE_ID
                };

                struct fragOutput {
                    fixed4 color : SV_Target;
                    float depth : SV_Depth;
                };

                float3 spherePos (float rad, float3 center, float3 origin, float3 dir)
                {
                    dir = normalize(dir);
                    float3 o_c = origin - center;
                    float delta = pow(dot(dir, o_c), 2) - (pow(length(o_c), 2) - rad * rad);
                    float d = -(dot(dir, o_c));
                    if(delta < 0){
                        discard;
                    }
                    if(delta > 0){
                        d = min(d + sqrt(delta), d - sqrt(delta));
                    }
                    return origin + d * dir;
                }

                float4 _Color;
                float _Radius;

                v2f vert (appdata v)
                {
                    v2f o;
                    UNITY_SETUP_INSTANCE_ID(v);
                    UNITY_TRANSFER_INSTANCE_ID(v, o);
                    o.fragPos = mul(unity_ObjectToWorld, v.vertex);
                    o.vertex = UnityObjectToClipPos(v.vertex);
                    return o;
                }

                fragOutput frag (v2f i)
                {
                    UNITY_SETUP_INSTANCE_ID(i);
                    float4 ambient = float4(0.1, 0.1, 0.1, 0.0);
                    float3 origin = mul(unity_ObjectToWorld, float4(0.0,0.0,0.0,1.0)).xyz;
                    float3 camera = _WorldSpaceCameraPos;
                    float3 lightDir = normalize(_WorldSpaceLightPos0.xyz);
                    float3 ray = i.fragPos - camera;

                    // depth value calculation in world space
                    float3 sphereP = spherePos(_Radius, origin, camera, ray);
                    float3 nrm = normalize(sphereP - origin);
                    float lamb = max(0, dot(nrm, lightDir));
                    fixed4 col = _Color * lamb + ambient;

                    // world to clipposition
                    float4 pos = mul(UNITY_MATRIX_VP, float4(sphereP, 1.0));

                    fragOutput o;
                    o.color = col;
                    // dehomogenizing
                    o.depth = (pos.z / pos.w);
                    return o;
                }
                ENDCG
            }
        }
    }

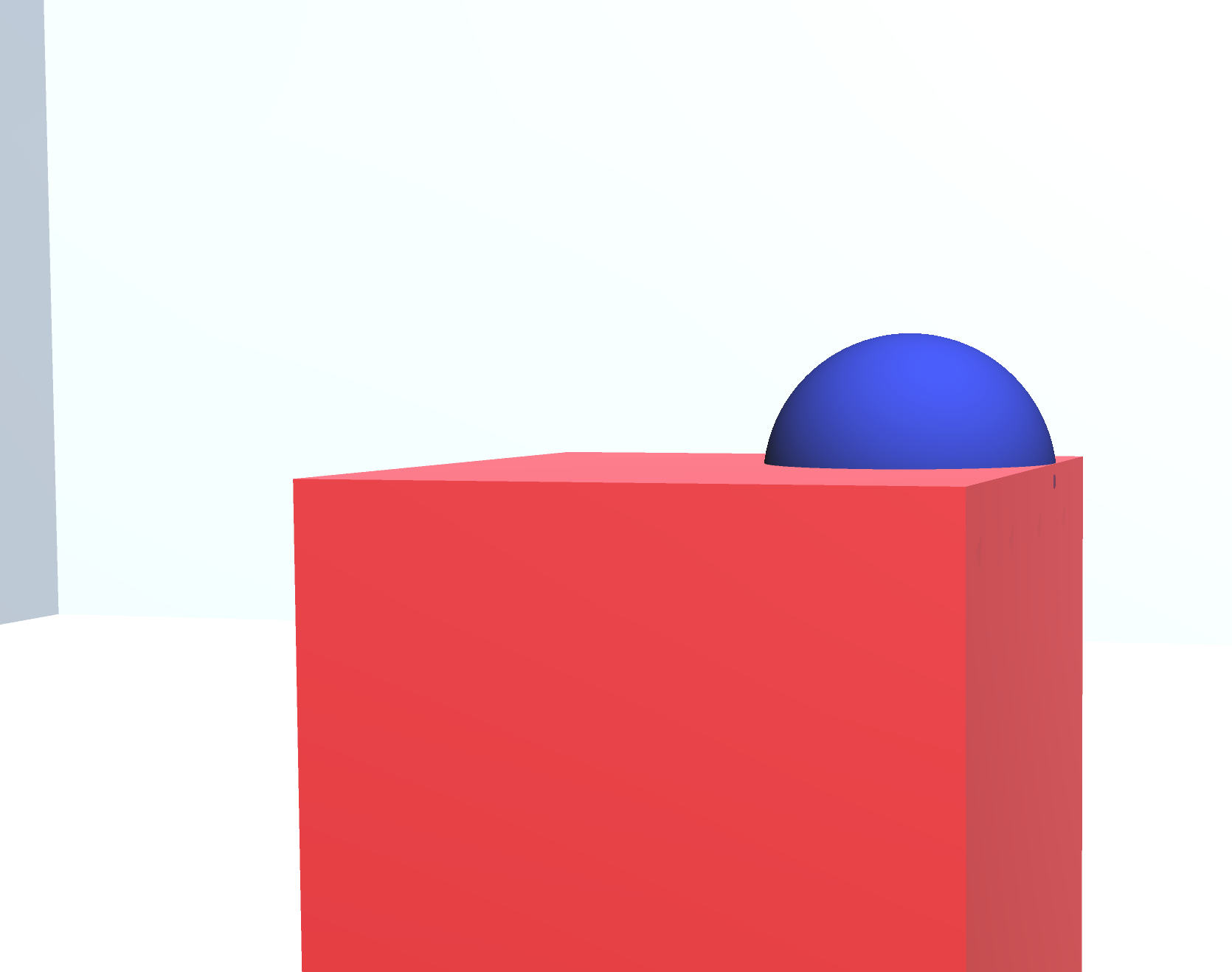

This is the correct rendering. In VR the sphere is fully visible, even though half of it actually lies inside the cube.
