Accurate Pinch Zoom

Hi,

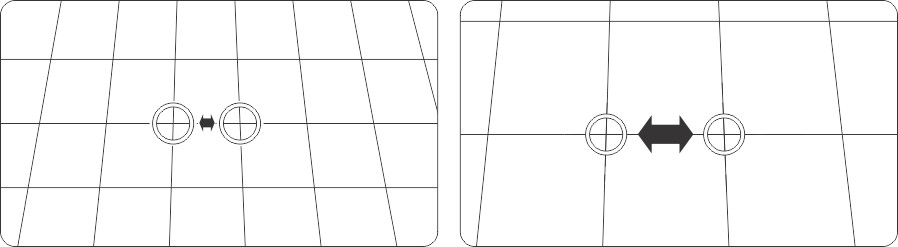

I'm trying to figure out how to create an accurate pinch zoom for my camera in Unity3D/C#. It must be based on the physical points on the terrain. The image below illustrates the effect I want to achieve.

The camera is a child of a null (empty) object whose scale varies between 0.1 and 1 to "zoom", so as not to mess with the perspective of the camera.

So what I've come up with so far is that each finger must use a raycast to get the A & B points as well as the current scale of the camera parent.

EG: A (10,0,2), B (14,0,4), S (0.8,0.8,0.8) >> A (10,0,2), B (14,0,4), S (0.3,0.3,0.3)

The positions of the fingers will change but the hit.point values should remain the same by changing the scale.

BONUS: As a bonus, it would be great to have the camera zoom into a point between the fingers, not just the center.

Thanks so much for any help or reference.

EDIT: I've come up with the code below so far, but it's not as accurate as I want. It incorporates some of the ideas I had above. I think the problem is that it shouldn't be /1000 but an equation that includes the current scale somehow.

if (Input.touchCount == 2) {

if (!CamZoom) {

CamZoom = true;

var rayA = Camera.main.ScreenPointToRay (Input.GetTouch (0).position);

var rayB = Camera.main.ScreenPointToRay (Input.GetTouch (1).position);

int layerMask = (1 << 8);

if (Physics.Raycast (rayA, out hit, 1500, layerMask)) {

PrevA = new Vector3 (hit.point.x, 0, hit.point.z);

Debug.Log ("PrevA: " + PrevA);

}

if (Physics.Raycast (rayB, out hit, 1500, layerMask)) {

PrevB = new Vector3 (hit.point.x, 0, hit.point.z);

Debug.Log ("PrevB: " + PrevB);

}

PrevDis = Vector3.Distance (PrevB, PrevA);

Debug.Log ("PrevDis: " + PrevDis);

PrevScale = this.transform.localScale.x;

PrevScaleV = new Vector3 (PrevScale, PrevScale, PrevScale);

Debug.Log ("PrevScale: " + PrevScale);

}

if (CamZoom) {

var rayA = Camera.main.ScreenPointToRay (Input.GetTouch (0).position);

var rayB = Camera.main.ScreenPointToRay (Input.GetTouch (1).position);

int layerMask = (1 << 8);

if (Physics.Raycast (rayA, out hit, 1500, layerMask)) {

NewA = new Vector3 (hit.point.x, 0, hit.point.z);

}

if (Physics.Raycast (rayB, out hit, 1500, layerMask)) {

NewB = new Vector3 (hit.point.x, 0, hit.point.z);

}

DeltaDis = PrevDis - (Vector3.Distance (NewB, NewA));

Debug.Log ("Delta: " + DeltaDis);

NewScale = PrevScale + (DeltaDis / 1000);

Debug.Log ("NewScale: " + NewScale);

NewScaleV = new Vector3 (NewScale, NewScale, NewScale);

this.transform.localScale = Vector3.Lerp(PrevScaleV,NewScaleV,Time.deltaTime);

PrevScaleV = NewScaleV;

CamAngle();

}

}

So, besides playing the "What have you tried?" card (or pointing out that you are not asking a question but stating that you are trying to figure something out), you almost have the answer yourself. You talk about hit.point and scaling, so I suggest you take a look at using Quads or Plane.Raycast.

I can get the variables; I'm just not sure how to use them to apply the scale relative to the distance. I don't think that scaling by a fixed value will give the accuracy I'm after, which is to have the points exactly under the fingers at all times. I could be wrong here. I'm just a bit confused.

Hi 4t0m1c, have you found a way to solve your problem yet? I have been searching the internet for quite a while now and haven't found an accurate solution. My own approach isn't accurate enough either.

Answer by SteveLillis · Jun 10, 2017 at 09:56 AM

Intro

I had to solve this same problem recently and started off with the same approach as you, which is to think of it as though the user is interacting with the scene and we need to figure out where in the scene their fingers are and how they're moving and then invert those actions to reflect them in our camera.

However, what we're really trying to achieve is much simpler. We simply want the user to feel like the area of the screen that they are pinching changes size by the same ratio as their pinch changes.

Aim

First let's summarise our goal and constraints:

Goal: When a user pinches, the pinched area should appear to scale to match the pinch.

Constraint: We do not want to change the scale of any objects.

Constraint: Our camera is a perspective camera.

Constraint: We do not want to change the field of view on the camera.

Constraint: Our solution should be resolution/device independent.

With all that in mind, and given that we know that with a perspective camera objects appear larger when they're closer and smaller when they're further, it seems that the only solution for scaling what the user sees is to move the camera in/out from the scene.

Solution

In order to make the scene look larger at our focal point, we need to position the camera so that a cross-section of the camera's frustum at the focal point is equivalently smaller.

Here's a diagram to better explain:

The top half of the image is the "illusion" we want to achieve of making the area the user expands twice as big on screen. The bottom half of the image is how we need to move the camera to position the frustum in a way that gives that impression.

The question then becomes how far do I move the camera to achieve the desired cross-section?

For this, we can take advantage of the relationship between the frustum's height h at a distance d from the camera when the camera's field of view angle in degrees is θ.
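For reference, the standard perspective-frustum relationship between these quantities, with θ taken as the vertical field of view and converted to radians before the tangent is evaluated, is:

$$
h = 2\,d\,\tan\!\left(\frac{\theta}{2}\right)
$$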

Since our field of view angle θ is constant per our agreed constraints, we can see that h and d are linearly proportional.

This is useful to know because it means that any multiplication/division of h is equally reflected in d. Meaning we can just apply our multipliers directly to the distance, no extra calculation to convert height to distance required!
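As a small worked example of that proportionality, write m for the pinch multiplier (a symbol introduced here for illustration; m = 2 means the fingers ended up twice as far apart). To make the pinched area look m times larger, the frustum height at the focal point has to shrink to h/m, and since h and d scale together for a fixed θ:

$$
\frac{h_{\text{new}}}{h_{\text{old}}} = \frac{d_{\text{new}}}{d_{\text{old}}} = \frac{1}{m}
\quad\Longrightarrow\quad
d_{\text{new}} = \frac{d_{\text{old}}}{m}
$$

So a camera 10 units from the focal point with m = 2 ends up 5 units away, and with m = 0.5 it ends up 20 units away.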

Implementation

So we finally get to the code.

First, we take the user's desired size change as a multiple of the previous distance between their fingers:

Touch touch0 = Input.GetTouch(0);

Touch touch1 = Input.GetTouch(1);

Vector2 prevTouchPosition0 = touch0.position - touch0.deltaPosition;

Vector2 prevTouchPosition1 = touch1.position - touch1.deltaPosition;

float touchDistance = (touch1.position - touch0.position).magnitude;

float prevTouchDistance = (prevTouchPosition1 - prevTouchPosition0).magnitude;

float touchChangeMultiplier = touchDistance / prevTouchDistance;

Now that we know by how much the user wants to scale the area they're pinching, we can scale the camera's distance from its focal point by the inverse amount.

The focal point is the intersection of the camera's forward ray and the thing you're zooming in on. For the sake of a simple example, I'll just be using the origin as my focal point.

Vector3 focalPoint = Vector3.zero;

Vector3 direction = camera.transform.position - focalPoint;

float newDistance = direction.magnitude / touchChangeMultiplier;

camera.transform.position = focalPoint + newDistance * direction.normalized;

camera.transform.LookAt(focalPoint);

That's all there is to it.

Bonus

This answer is already very long. So to briefly answer your question about making the camera focus on where you're pinching:

When you first detect a 2 finger touch, store the screen position and related world position.

When zooming, move the camera to put the world position back at the same screen position.
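The two steps above could be sketched roughly as follows. This is only an illustration under stated assumptions, not the answer's own code: the class name PinchAnchor, the cam field, and the TryGroundPoint helper are hypothetical, the terrain is approximated by a flat plane at y = 0, and the zoom step is assumed to move the camera without rotating it.

```csharp
using UnityEngine;

// Hypothetical helper: keeps the world point that was under the pinch
// at the same screen position while the camera zooms.
public class PinchAnchor : MonoBehaviour
{
    public Camera cam;                                          // assumed: the camera being moved
    private Plane ground = new Plane(Vector3.up, Vector3.zero); // assumed: flat ground at y = 0

    private Vector2 anchorScreen;  // screen position stored when the pinch began
    private Vector3 anchorWorld;   // world point that was under that screen position
    private bool hasAnchor;

    // Finds the ground point under a screen position; returns false if the ray misses the plane.
    private bool TryGroundPoint(Vector2 screenPos, out Vector3 world)
    {
        Ray ray = cam.ScreenPointToRay(screenPos);
        float enter;
        if (ground.Raycast(ray, out enter))
        {
            world = ray.GetPoint(enter);
            return true;
        }
        world = Vector3.zero;
        return false;
    }

    private void LateUpdate()
    {
        if (Input.touchCount != 2)
        {
            hasAnchor = false;
            return;
        }

        if (!hasAnchor)
        {
            // Step 1: first frame of the two-finger touch, store screen and world positions.
            Touch t0 = Input.GetTouch(0);
            Touch t1 = Input.GetTouch(1);
            anchorScreen = (t0.position + t1.position) * 0.5f;
            hasAnchor = TryGroundPoint(anchorScreen, out anchorWorld);
            return;
        }

        // Step 2: assumed to run after the zoom step has moved the camera this frame.
        // Slide the camera parallel to the ground so the stored world point
        // is back under the stored screen position.
        Vector3 current;
        if (TryGroundPoint(anchorScreen, out current))
        {
            cam.transform.position += anchorWorld - current;
        }
    }
}
```

The correction relies on both points lying on the ground plane: translating the camera by their difference, without rotating it, shifts the point under any given pixel by the same amount. If the zoom step also calls LookAt, the rotation would need to be accounted for separately.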

Hi steve, This is so far one of the best answers I've come across, cheers for the great explanation.

I'm having a little trouble setting up the focal point, I've gone like this:

Vector3 position1 = Camera.main.ScreenToWorldPoint(new Vector3(touch0.position.x, touch0.position.y, transform.position.y));

Vector3 position2 = Camera.main.ScreenToWorldPoint(new Vector3(touch1.position.x, touch1.position.y, transform.position.y));

focalPoint = Vector3.Lerp(position1, position2, 0.5f);

That should be the mid point between the projections of the touches.

It kinda works, pinches and drags the camera precisely, but whenever I end touch and begin again in another location it moves back the camera to initial position. (0,500,0)

Any suggestions? Ty Alan
