
How do I combine normals on procedural mesh with multiple tiles?

I'm generating a quad sphere, but when I call mesh.RecalculateNormals() I get noticeable seams along each face's edges. I realize this happens because each face has no knowledge of the triangles beyond its own edges, but is there a convenient way to solve it? Here's the code I use to generate my mesh vertices and triangles:

    Vector3[] vertices = new Vector3[detail*detail];
    Vector2[] uv1 = new Vector2[vertices.Length];
    int[] triangles = new int[(detail - 1)*(detail - 1) * 6];
    int triIndex = 0;

    int leftDiv = subdivisions.currentSubLev - subdivisions.leftSubLev;
    int rightDiv = subdivisions.currentSubLev - subdivisions.rightSubLev;
    int upDiv = subdivisions.currentSubLev - subdivisions.upSubLev;
    int downDiv = subdivisions.currentSubLev - subdivisions.downSubLev;

    bool stitchLeft = leftDiv > 0;
    bool stitchRight = rightDiv > 0;
    bool stitchUp = upDiv > 0;
    bool stitchDown = downDiv > 0;

    for (int x = 0; x < detail; x++)
    {
        for (int y = 0; y < detail; y++)
        {
            int i = x + y * detail;

            Vector2 percentage = StitchEdges(
                detail,
                leftDiv, rightDiv, upDiv, downDiv,
                stitchLeft, stitchRight, stitchUp, stitchDown,
                x, y);

            Vector3 directionA = (percentage.x - 0.5f) * scale * 2f * axisA;
            Vector3 directionB = (percentage.y - 0.5f) * scale * 2f * axisB;
            Vector3 pointOnUnitCube = direction + directionA + directionB;
            Vector3 pointOnUnitSphere = pointOnUnitCube.normalized;

            Vector2 uvCoord = PlanetHelper.CartesianToPolarUv(pointOnUnitSphere);
            float height = planet.GetHeight(pointOnUnitSphere, uvCoord);
            Vector3 vertex = pointOnUnitSphere * (radius + height);

            vertices[i] = vertex;
            uv1[i] = uvCoord;

            if (x != detail - 1 && y != detail - 1)
            {
                triangles[triIndex] = i;
                triangles[triIndex + 1] = i + detail + 1;
                triangles[triIndex + 2] = i + detail;

                triangles[triIndex + 3] = i;
                triangles[triIndex + 4] = i + 1;
                triangles[triIndex + 5] = i + detail + 1;

                triIndex += 6;
            }
        }
    }

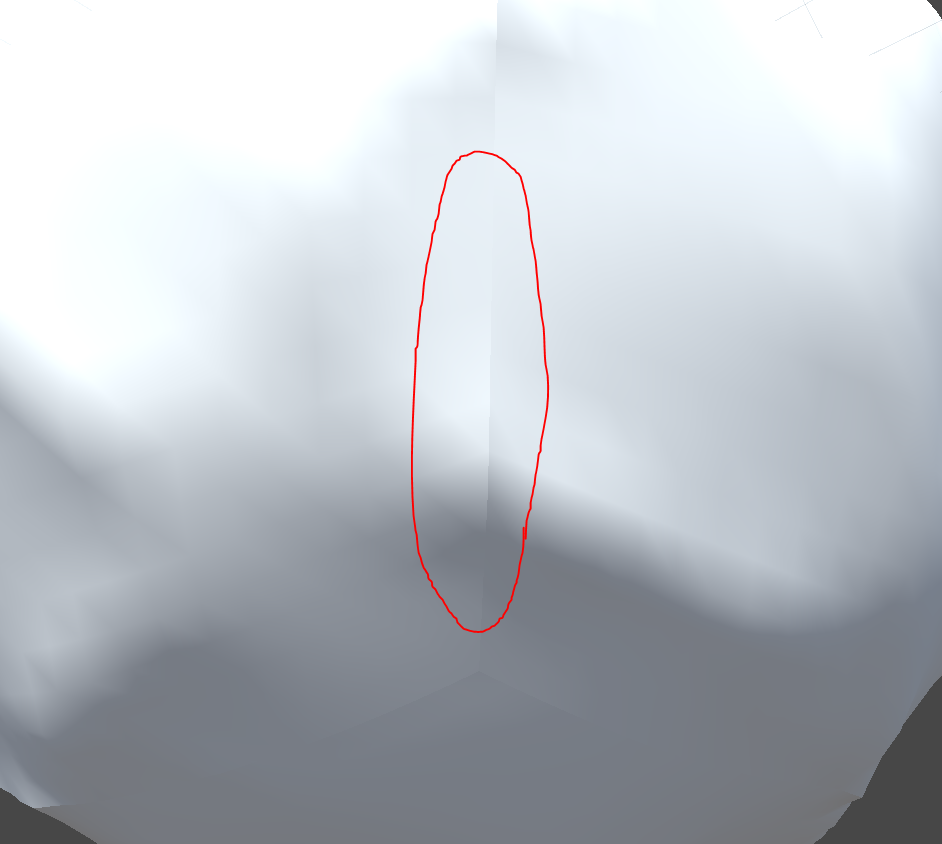

And here's an image that shows the problem with the edge seams:

Hopefully, someone knows of a way to fix this or has dealt with a similar issue? Thanks!

Answer by sxnorthrop · Oct 03, 2018 at 09:43 AM

I seem to have solved this issue myself by following the steps listed in this tutorial.

Essentially, I built a border of extra vertices around the edges of each mesh face and used a set of "fake triangles" to calculate the normals of the edge vertices. These fake border vertices are placed in the exact location they would occupy if they had been on the neighboring face. This seems to work perfectly fine without any issues so far (and it's super fast).
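For readers who want something concrete, here is a minimal sketch of the border idea, not the code actually used in this answer (which was never posted). It assumes the caller supplies a padded grid of (detail + 2) x (detail + 2) positions whose outer ring holds the border vertices; how those border positions are computed is left out. The class name `BorderedNormals` and its method are hypothetical.

```csharp
using UnityEngine;

public static class BorderedNormals
{
    // 'padded' holds (detail + 2) * (detail + 2) positions, index = x + y * size.
    // The outer ring contains the border vertices; only the inner
    // detail * detail block belongs to the real mesh.
    public static Vector3[] Calculate(Vector3[] padded, int detail)
    {
        int size = detail + 2;
        Vector3[] sums = new Vector3[padded.Length]; // starts as all zero vectors

        // Walk every quad of the padded grid, including the "fake" border quads.
        for (int y = 0; y < size - 1; y++)
        {
            for (int x = 0; x < size - 1; x++)
            {
                int i = x + y * size;
                // Same corner order as the triangles in the question's code.
                AddFace(padded, sums, i, i + size + 1, i + size);
                AddFace(padded, sums, i, i + 1, i + size + 1);
            }
        }

        // Keep only the normals of the real (inner) vertices.
        Vector3[] normals = new Vector3[detail * detail];
        for (int y = 0; y < detail; y++)
        {
            for (int x = 0; x < detail; x++)
            {
                normals[x + y * detail] = sums[(x + 1) + (y + 1) * size].normalized;
            }
        }
        return normals;
    }

    // Adds the triangle's face normal to each of its three corners.
    static void AddFace(Vector3[] p, Vector3[] sums, int a, int b, int c)
    {
        Vector3 n = Vector3.Cross(p[b] - p[a], p[c] - p[a]).normalized;
        sums[a] += n;
        sums[b] += n;
        sums[c] += n;
    }
}
```

The border triangles never end up in the mesh; they only contribute face normals, so the result can be assigned to mesh.normals in place of calling RecalculateNormals().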

Hello. Could you share the code you implemented to solve the normals issue? I'm new to Unity and I managed to get a procedural sphere going, but I am not sure how to implement what's in that video.

Answer by Bunny83 · Oct 02, 2018 at 11:59 PM

Just don't use RecalculateNormals. The normals of a sphere are the simplest normals possible: just use your normalized vertex position as the normal. Since the center of your sphere is at 0,0,0 in local space, the coordinate of a vertex represents the normal direction. Since you already have the normalized direction (pointOnUnitSphere), just use that.

    normals[i] = pointOnUnitSphere;

edit

I just saw that you apply a height map displacement to the vertices. Of course, in this case the vertex position doesn't necessarily represent the normal, so you have to calculate the normals yourself. The normal of a single vertex is just the normalized arithmetic mean of all surface normals at that point. The main issue here is duplicated / split vertices, which you always have when you use UV coordinates since you need UV seams. So when calculating the normals you have to keep track of which vertices actually represent the same point. This could be done with a dictionary lookup, so that all the duplicated vertex indices are remapped to a single one. That way we can process all triangles one by one, calculating the surface normal from the 3 corners and adding that normal to every corner.

There are generally two ways to do the mapping of duplicated vertices. Either you know how you generate your vertices, so you know which ones you duplicated and which ones belong together, or you do a position-based matching. A naive position-based matching has a time complexity of O(n²) since you have to check every vertex against every other vertex.

To store the matching results you could use a simple int array with the same size as the vertices array. Initialize all elements with "-1". If there's a duplicated vertex, assign the smaller index to the element in the map at the higher index. So the lowest index is the actual vertex used, and all the others just point to the lowest index at the same position.
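As a rough illustration of such a map, the sketch below uses the dictionary lookup mentioned earlier instead of the O(n²) comparison. It snaps each position to a grid cell and treats vertices in the same cell as duplicates. The class name `DuplicateVertexMap` and the `tolerance` parameter are assumptions made for this example; two nearly identical positions that straddle a cell boundary would be missed, and the tolerance has to suit the scale of the mesh so the rounded values fit in an int.

```csharp
using System.Collections.Generic;
using UnityEngine;

public static class DuplicateVertexMap
{
    // Returns map[i] == -1 when vertex i is the first vertex at its position,
    // otherwise the (lower) index of the first vertex at that position.
    // 'tolerance' is a hypothetical snapping distance for the position match.
    public static int[] Build(Vector3[] vertices, float tolerance)
    {
        int[] map = new int[vertices.Length];
        Dictionary<Vector3Int, int> firstIndexAtCell = new Dictionary<Vector3Int, int>();

        for (int i = 0; i < vertices.Length; i++)
        {
            Vector3 v = vertices[i];
            // Quantize the position so nearby vertices share a dictionary key.
            Vector3Int cell = new Vector3Int(
                Mathf.RoundToInt(v.x / tolerance),
                Mathf.RoundToInt(v.y / tolerance),
                Mathf.RoundToInt(v.z / tolerance));

            int first;
            if (firstIndexAtCell.TryGetValue(cell, out first))
            {
                map[i] = first;            // duplicate: point at the lowest index
            }
            else
            {
                firstIndexAtCell.Add(cell, i);
                map[i] = -1;               // first vertex seen at this position
            }
        }
        return map;
    }
}
```

Because the vertices are visited in ascending order, every duplicate automatically points at the lowest index with the same position, which matches the convention described above.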

To create the normals array, initialize all normals with a zero vector. Iterate through all triangles. Calculate the normal of the triangle (normalized cross product between two edges) and add that calculated normal to each corner of the triangle. It's important to not just blindly use the vertex index from the triangle array but to first check the map to see if it's a duplicated vertex (the map has a value larger than "-1"). That way we only add the normals to the "first" of two or more duplicated vertices. Once all triangles have been processed, iterate through all normals again. If the map is -1, just re-normalize the normal. If the map is 0 or greater, read the normal from the mapped index and store it at the current index. That way all duplicated vertices get the same normal.
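The two steps above could look roughly like this. It is only a sketch: the class name `SeamlessNormals` is made up for the example, and `map` is assumed to follow the convention described above (-1 for the first vertex at a position, otherwise the lower index of that first vertex).

```csharp
using UnityEngine;

public static class SeamlessNormals
{
    public static Vector3[] Calculate(Vector3[] vertices, int[] triangles, int[] map)
    {
        Vector3[] normals = new Vector3[vertices.Length]; // all zero vectors

        // Step 1: add each triangle's face normal to its three corners,
        // redirecting duplicated vertices to the first vertex at that position.
        for (int t = 0; t < triangles.Length; t += 3)
        {
            int a = triangles[t];
            int b = triangles[t + 1];
            int c = triangles[t + 2];

            Vector3 faceNormal = Vector3.Cross(
                vertices[b] - vertices[a],
                vertices[c] - vertices[a]).normalized;

            normals[Resolve(a, map)] += faceNormal;
            normals[Resolve(b, map)] += faceNormal;
            normals[Resolve(c, map)] += faceNormal;
        }

        // Step 2: normalize the sums and hand them out to the duplicates.
        // A duplicate always maps to a lower index, so by the time we reach it
        // the normal it copies has already been normalized.
        for (int i = 0; i < normals.Length; i++)
        {
            if (map[i] < 0)
            {
                normals[i] = normals[i].normalized;
            }
            else
            {
                normals[i] = normals[map[i]];
            }
        }
        return normals;
    }

    // Returns the index whose normal accumulates the sum for 'index'.
    static int Resolve(int index, int[] map)
    {
        return map[index] < 0 ? index : map[index];
    }
}
```

The result can be assigned to mesh.normals instead of calling RecalculateNormals(). For seams between separate tile meshes, the vertices and triangles of the neighboring tiles would have to be processed together, since a single mesh still has no knowledge of its neighbors.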

That seems like a really fast solution, but won't the first face have incorrect normals at (x:0 y:n) and (x:n, y:0)? That would only make sense because the map has not been initialized with the correct normal of its left neighbor. I feel like the best solution would be to generate some sort of vertex border along the edges of the mesh, but, that's much more complicated for my situation since I'm working with a sphere and the direction of each face is not the same.

No, since it's a two-step process ^^. First you iterate over all triangles and add up all face normals. Once done, the normals of the "primal" vertices should each consist of the sum of all faces that meet at that vertex, assuming of course that you've done the mapping correctly. Once all normals have been added up, we iterate once through the normals array just to normalize the vector sums and to distribute the normals to the shared vertices. The addition of the normals and the normalization / distribution have to be two separate steps.

The addition step iterates over the triangles, one triangle at a time, while the normalization / distribution step iterates just over the normals.

I went ahead and did my border idea and it seems to be working. Thank you for answering though, yo. I'm almost certain this will help someone who's trying to do the same as me. There's a serious lack of information about this stuff online!
