Scale a canvas based on reference camera viewport

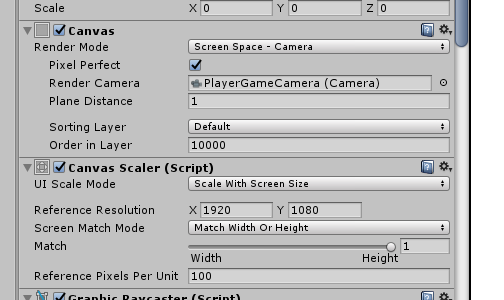

I have a split screen game that spawns a camera and a canvas based on the number of players (from 1 to 4). This is what my Canvas Inspector looks like:

When I run the game and spawn more than one camera and canvas, they are displayed, but they are not scaled properly. For example, because each viewport is narrower than the full screen, the HP and Energy bars at the top overlap each other, even though they are anchored to the top-left and top-right corners of the screen.

The UI elements are designed for a single 1920x1080 view. I expected them to scale down accordingly when the view is split, but they don't.

What settings do I need to change so that it scales based on camera viewport size? Or do I need to manually resize the canvas by code when in split-screen mode?

I found this which might do the trick (the setVertices part):

Answer by Ideka · Aug 04, 2017 at 04:19 AM

I just had to make a component that does the trick. Use it instead of the default CanvasScaler.

I inherited from the default CanvasScaler and, in the overridden HandleScaleWithScreenSize, added the if block that multiplies the screen size by the width and height of the camera's viewport rect.
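
In formula terms, the change can be sketched as follows. The symbols are shorthand introduced for illustration, not names from the Unity source: $W \times H$ is the screen size in pixels, $r_w$ and $r_h$ are the normalized width and height of the camera's viewport rect, $W_{\text{ref}} \times H_{\text{ref}}$ is the reference resolution, and $m \in [0, 1]$ is the Match Width Or Height value. The scaler works from the viewport size instead of the full screen size:

$$
w = W \, r_w, \qquad h = H \, r_h
$$

In Match Width Or Height mode, the scale factor is then

$$
s = 2^{(1 - m)\log_2(w / W_{\text{ref}}) + m \log_2(h / H_{\text{ref}})} = \left(\frac{w}{W_{\text{ref}}}\right)^{1 - m} \left(\frac{h}{H_{\text{ref}}}\right)^{m}
$$

As an illustration, assume a 1920x1080 screen, a 1920x1080 reference resolution, and two players side by side, so $r_w = 0.5$ and $r_h = 1$. Then $w = 960$ and $h = 1080$, which gives $s = 0.5$ for $m = 0$, $s = \sqrt{0.5} \approx 0.71$ for $m = 0.5$, and $s = 1$ for $m = 1$. With $m = 1$ (match height only) the UI would not shrink at all in this layout.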

    using UnityEngine;
    using UnityEngine.UI;

    public class CameraCanvasScaler : CanvasScaler
    {
        // The log base doesn't have any influence on the results whatsoever, as long as the same base is used everywhere.
        public const float kLogBase = 2;

        private Canvas m_Canvas;

        protected override void OnEnable()
        {
            m_Canvas = GetComponent<Canvas>();
            base.OnEnable();
        }

        protected override void HandleScaleWithScreenSize()
        {
            Vector2 screenSize = new Vector2(Screen.width, Screen.height);
            if (m_Canvas.renderMode == RenderMode.ScreenSpaceCamera && m_Canvas.worldCamera != null)
            {
                screenSize.x *= m_Canvas.worldCamera.rect.width;
                screenSize.y *= m_Canvas.worldCamera.rect.height;
            }

            float scaleFactor = 0;
            switch (m_ScreenMatchMode)
            {
                case ScreenMatchMode.MatchWidthOrHeight:
                {
                    // We take the log of the relative width and height before taking the average.
                    // Then we transform it back in the original space.
                    // The reason to transform in and out of logarithmic space is to have better behavior.
                    // If one axis has twice resolution and the other has half, it should even out if widthOrHeight value is at 0.5.
                    // In normal space the average would be (0.5 + 2) / 2 = 1.25
                    // In logarithmic space the average is (-1 + 1) / 2 = 0
                    float logWidth = Mathf.Log(screenSize.x / m_ReferenceResolution.x, kLogBase);
                    float logHeight = Mathf.Log(screenSize.y / m_ReferenceResolution.y, kLogBase);
                    float logWeightedAverage = Mathf.Lerp(logWidth, logHeight, m_MatchWidthOrHeight);
                    scaleFactor = Mathf.Pow(kLogBase, logWeightedAverage);
                    break;
                }
                case ScreenMatchMode.Expand:
                {
                    scaleFactor = Mathf.Min(screenSize.x / m_ReferenceResolution.x, screenSize.y / m_ReferenceResolution.y);
                    break;
                }
                case ScreenMatchMode.Shrink:
                {
                    scaleFactor = Mathf.Max(screenSize.x / m_ReferenceResolution.x, screenSize.y / m_ReferenceResolution.y);
                    break;
                }
            }

            SetScaleFactor(scaleFactor);
            SetReferencePixelsPerUnit(m_ReferencePixelsPerUnit);
        }
    }
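
The scaler only adjusts for the viewport when the canvas uses the Screen Space - Camera render mode and has a camera assigned, so a spawning script needs to set both. Here is a minimal usage sketch, assuming a canvas prefab that has CameraCanvasScaler attached in place of CanvasScaler. The names SplitScreenSpawner, cameraPrefab, canvasPrefab and playerCount, and the viewport layout in GetViewport, are hypothetical and not part of the original answer.

```csharp
using UnityEngine;

// Hypothetical helper for illustration only; not part of the original answer.
public class SplitScreenSpawner : MonoBehaviour
{
    // Assumed prefabs: the canvas prefab carries CameraCanvasScaler
    // instead of the default CanvasScaler.
    [SerializeField] private Camera cameraPrefab;
    [SerializeField] private Canvas canvasPrefab;
    [SerializeField, Range(1, 4)] private int playerCount = 2;

    private void Start()
    {
        for (int i = 0; i < playerCount; i++)
        {
            // Give each player's camera its own normalized viewport.
            Camera cam = Instantiate(cameraPrefab);
            cam.rect = GetViewport(i, playerCount);

            // Bind the canvas to that camera so the scaler can read
            // worldCamera.rect when it computes the scale factor.
            Canvas canvas = Instantiate(canvasPrefab);
            canvas.renderMode = RenderMode.ScreenSpaceCamera;
            canvas.worldCamera = cam;
        }
    }

    // Assumed layout: 1 player = full screen, 2 players = side by side,
    // 3 or 4 players = 2x2 grid (top row first).
    public static Rect GetViewport(int index, int count)
    {
        if (count <= 1) return new Rect(0f, 0f, 1f, 1f);
        if (count == 2) return new Rect(index * 0.5f, 0f, 0.5f, 1f);

        float x = (index % 2) * 0.5f;
        float y = index < 2 ? 0.5f : 0f;
        return new Rect(x, y, 0.5f, 0.5f);
    }
}
```

With the side-by-side layout each viewport is half the screen width, so a Match Width Or Height value near 0 (match width) is the setting that shrinks the UI to fit.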
