Mimicking movement of one object to another with different scale/location

G'day all,

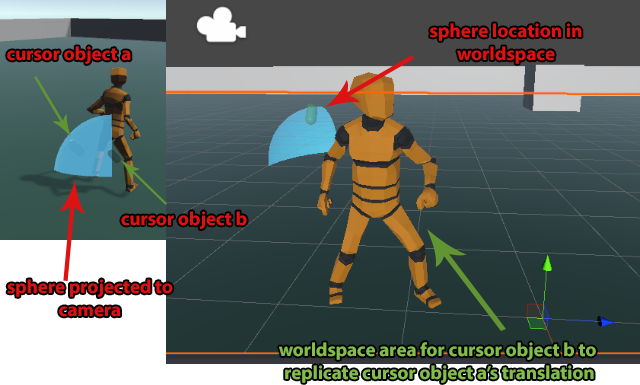

I'm trying to 'mimic' or 'replicate' the movement of a gameobject controlled by mouse input on a gameobject in world space that is attached to the player.

I'm currently using a raycast to a sphere mesh to position a gameobject (cursorObjA) at a point on the sphere, as part of the user interface for my player control. This setup exists in the scene in a random spot, with a camera aimed at it so I can use it as an interface without it affecting the game world (and running into mesh collision errors).

What I want to do is get the position of that object and use it to drive an IK point (cursorObjB) on the player's model, to guide the positioning of the hand/arm/body. When cursorObjA moves around the sphere, I want the same movement for cursorObjB, but upscaled to match the player mesh space.

See the image above for reference. I currently have the cursor system working, but I'm stuck trying to figure out how to pass the information through to cursorObjB and constrain it so it mimics the player's input. I've tried a few times using local rotation, with and without multipliers, and even tried to physically position the objects at the same point, but couldn't get it to work properly.

The main values I need to pass are the position and the rotation on the z axis.

Does anyone know what methods or api functions I should be looking at to do this properly?

Answer by streeetwalker · Sep 23, 2020 at 04:26 AM

Kind of like Telepresence - cool idea!

If you convert your mouse position on the spherical section to spherical coordinates, you can then apply those to another sphere of any size at any location you want. Once you pick the target sphere's radius and location, you can convert the coordinates back to Cartesian and apply them to your hand. If you need to rotate the target sphere to match the local orientation of the target, I guess you'd do that before you convert back. The equations to do all this are out there on the web.
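
To make the round trip concrete, here is a minimal sketch in plain Python (just the math, not Unity code). The function names `to_spherical`, `to_cartesian` and `remap` are made up for illustration, and it assumes the polar angle is measured from the z axis; in Unity you would probably port this to C# and may prefer to measure from the y (up) axis instead.

```python
import math

def to_spherical(point, center):
    """Cartesian point -> (radius, polar, azimuth) relative to center.

    polar is the angle from the +z axis, azimuth is the angle in the
    xy-plane measured from +x (an assumed convention).
    """
    x, y, z = (p - c for p, c in zip(point, center))
    radius = math.sqrt(x * x + y * y + z * z)
    if radius == 0.0:
        return 0.0, 0.0, 0.0  # direction is undefined at the center
    polar = math.acos(max(-1.0, min(1.0, z / radius)))
    azimuth = math.atan2(y, x)
    return radius, polar, azimuth

def to_cartesian(radius, polar, azimuth, center):
    """(radius, polar, azimuth) -> Cartesian point offset by center."""
    x = radius * math.sin(polar) * math.cos(azimuth)
    y = radius * math.sin(polar) * math.sin(azimuth)
    z = radius * math.cos(polar)
    return (center[0] + x, center[1] + y, center[2] + z)

def remap(point_a, center_a, center_b, radius_b):
    """Copy the direction of point_a on sphere A onto sphere B."""
    # The source radius is discarded: only the two angles carry over.
    _, polar, azimuth = to_spherical(point_a, center_a)
    return to_cartesian(radius_b, polar, azimuth, center_b)

# Hypothetical values: small UI sphere at the origin, larger sphere
# centered on the player.
cursor_b = remap((0.0, 0.5, 0.5), (0.0, 0.0, 0.0), (10.0, 1.0, 0.0), 2.0)
```

Since only the two angles are kept, the result always lies on the target sphere. If the target sphere also has to follow the player's orientation, the offset would need to be rotated by that orientation before the center is added.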

If it is more convenient to use the rotation with respect to z, that is already a form of spherical coordinate. You just need to rotate that with respect to the local orientation of the target. I believe to do that, you can get the rotation with respect to z as a quaternion, and then multiply that by the local rotation of the target - something like that!
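
As a rough illustration of that quaternion multiplication, here is a small plain-Python sketch. The helpers `quat_about_z`, `quat_mul` and `rotate` are hypothetical, quaternions are stored as `(w, x, y, z)`, and the multiplication order shown (target rotation on the left) is an assumption that applies the z rotation in the target's local frame. In Unity the equivalent would probably be something like `target.rotation * Quaternion.AngleAxis(angle, Vector3.forward)`, but check the direction of rotation there, since Unity uses a left-handed coordinate system.

```python
import math

def quat_about_z(angle_deg):
    """Quaternion (w, x, y, z) for a rotation of angle_deg about the z axis."""
    half = math.radians(angle_deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))

def quat_mul(a, b):
    """Hamilton product a * b; as a rotation, b is applied first, then a."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def rotate(q, v):
    """Rotate vector v by unit quaternion q, computed as q * (0, v) * conj(q)."""
    w, x, y, z = q
    p = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), (w, -x, -y, -z))
    return (p[1], p[2], p[3])

# Hypothetical target orientation: 90 degrees about the y axis.
half = math.radians(90.0) / 2.0
target_rotation = (math.cos(half), 0.0, math.sin(half), 0.0)

# The cursor's z rotation, expressed relative to the target's orientation.
combined = quat_mul(target_rotation, quat_about_z(90.0))
```

Swapping the operands of `quat_mul` would instead apply the z rotation about the world z axis after the target's rotation, so it is worth trying both orders to see which matches the behaviour you want.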

Hi, thanks for the response. I'll have to wrap my head around it, as the calculations behind it aren't my strong suit. I currently have the z rotation apparently working, which is neat; I'm just struggling to get the positioning correct. I was hoping to do away with a second sphere, but it might actually be the way to go: simply match a coordinate position rather than a position in 3D space. Do you think that would be more or less resource intensive?

Sorry, I had to refresh my memory. It seems you already have half a spherical coordinate in the representation of your mouse position as a rotation with respect to z. If you can also describe the point as a rotation from a second axis, then I think you have everything you need to transform it to your hand/arm position.

I've done something like what you are doing in a similar context, but it has been a while. The math isn't that difficult: https://mathinsight.org/spherical_coordinates
